What Is Data Detection and Response (DDR)?

Data detection and response (DDR) is a technology solution designed to detect and respond to data-related security threats in real time. It focuses on monitoring data at its source, which allows organizations to identify threats that might not be detected by traditional infrastructure-focused security solutions. DDR continuously scans data activity logs, such as those from AWS CloudTrail and Azure Monitor, to identify anomalous data access and suspicious behaviors indicative of potential threats. Once a threat is detected, DDR triggers alerts to notify security teams, enabling swift response to contain and mitigate the threat.
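
As a rough illustration of log-based detection, the Python sketch below filters activity records for reads of sensitive data. It assumes the records have already been parsed into dictionaries shaped like AWS CloudTrail S3 data events (`eventSource`, `eventName`, `userIdentity`, `requestParameters`), which CloudTrail records only when data-event logging is enabled. The `SENSITIVE_BUCKETS` set is hypothetical and stands in for the output of an earlier classification step. A real DDR product would stream and enrich these events rather than filter an in-memory list.

```python
from typing import Any, Iterable

# Hypothetical output of an earlier discovery/classification step.
SENSITIVE_BUCKETS = {"customer-records", "payroll-exports"}

# S3 data-event names that read object contents.
READ_EVENTS = {"GetObject", "SelectObjectContent"}


def sensitive_reads(records: Iterable[dict[str, Any]]) -> list[dict[str, str]]:
    """Summarize S3 read events that touch buckets classified as sensitive."""
    hits = []
    for rec in records:
        if rec.get("eventSource") != "s3.amazonaws.com":
            continue
        if rec.get("eventName") not in READ_EVENTS:
            continue
        params = rec.get("requestParameters") or {}
        bucket = params.get("bucketName")
        if bucket in SENSITIVE_BUCKETS:
            hits.append({
                "actor": rec.get("userIdentity", {}).get("arn", "unknown"),
                "bucket": bucket,
                "key": params.get("key", ""),
                "time": rec.get("eventTime", ""),
            })
    return hits


sample = [
    {"eventSource": "s3.amazonaws.com", "eventName": "GetObject",
     "eventTime": "2024-05-01T10:00:00Z",
     "userIdentity": {"arn": "arn:aws:iam::111122223333:user/analyst"},
     "requestParameters": {"bucketName": "customer-records", "key": "2024/q1.csv"}},
    # A write, not a read, so this filter ignores it.
    {"eventSource": "s3.amazonaws.com", "eventName": "PutObject",
     "requestParameters": {"bucketName": "customer-records", "key": "tmp.csv"}},
]
print(sensitive_reads(sample))
```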

Data Detection and Response Explained

At the heart of a DDR solution are advanced data analytics and machine learning algorithms that continuously monitor and analyze vast amounts of data generated by an organization's cloud services, networks, and applications.

These powerful analytical capabilities enable the DDR solution to detect anomalies, vulnerabilities, and suspicious activities in real time. By leveraging predictive and behavioral analytics, DDR systems can often identify threats before they cause significant damage. Once a potential threat is detected, the DDR process shifts into the response phase, a series of predefined and automated actions designed to contain and neutralize the threat.
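
A per-user statistical baseline is a much simpler stand-in for the machine learning models described above, but it captures the core idea of behavioral analytics: compare current activity with the actor's own history. In this sketch, the metric (megabytes read per day) and the three-standard-deviation threshold are illustrative assumptions, not values used by any particular DDR product.

```python
from statistics import mean, pstdev


def is_anomalous(history: list[float], today: float, threshold: float = 3.0) -> bool:
    """Flag `today` if it is more than `threshold` standard deviations above the actor's baseline."""
    if len(history) < 2:
        return False  # too little history to form a baseline
    mu = mean(history)
    sigma = pstdev(history)
    if sigma == 0:
        return today > mu  # perfectly flat baseline: any increase is unusual
    return (today - mu) / sigma > threshold


# Megabytes read per day by one user over recent working days (illustrative numbers).
daily_mb = [120, 95, 130, 110, 105]
print(is_anomalous(daily_mb, 140))   # within normal variation
print(is_anomalous(daily_mb, 2400))  # far outside the baseline
```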

Response actions may include blocking suspicious network traffic, isolating infected devices, updating security policies, or triggering alerts to security teams for investigation and remediation. Much of a DDR system's effectiveness depends on how well it integrates with other security tools and technologies, which enables a comprehensive, coordinated approach to cloud data security.
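
The predefined, automated part of the response phase can be modeled as a playbook that maps alert types to ordered actions. The alert kinds and action names below are hypothetical placeholders; in practice, each step would call a cloud provider or SOAR API. The sketch also shows one common design choice, assumed here rather than taken from any product: only high-severity alerts trigger containment automatically, while everything else goes to analysts first.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    kind: str       # e.g. "external_share", "mass_download" (hypothetical kinds)
    asset: str
    actor: str
    severity: str   # "low", "medium", or "high"


# Hypothetical playbook: alert kind -> ordered response steps.
PLAYBOOK = {
    "external_share": ["revoke_share", "notify_soc"],
    "mass_download": ["suspend_session", "rotate_credentials", "notify_soc"],
}


def plan_response(alert: Alert) -> list[str]:
    """Return the response steps to run for an alert."""
    # Unknown alert kinds fall back to human review.
    steps = list(PLAYBOOK.get(alert.kind, ["notify_soc"]))
    if alert.severity != "high":
        # Below high severity, skip automated containment and only notify analysts.
        steps = [s for s in steps if s == "notify_soc"]
    return steps


print(plan_response(Alert("mass_download", "orders-db", "svc-report", "high")))
print(plan_response(Alert("external_share", "snap-0501", "jdoe", "medium")))
```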

Continuous Is Key to DDR

Importantly, DDR isn’t a one-time implementation. Data detection and response is an ongoing process that requires continuous monitoring, threat intelligence gathering, and updating of response protocols. As the threat landscape evolves and new attack vectors emerge, organizations must regularly review and refine their DDR strategies to ensure they remain effective and adaptable.

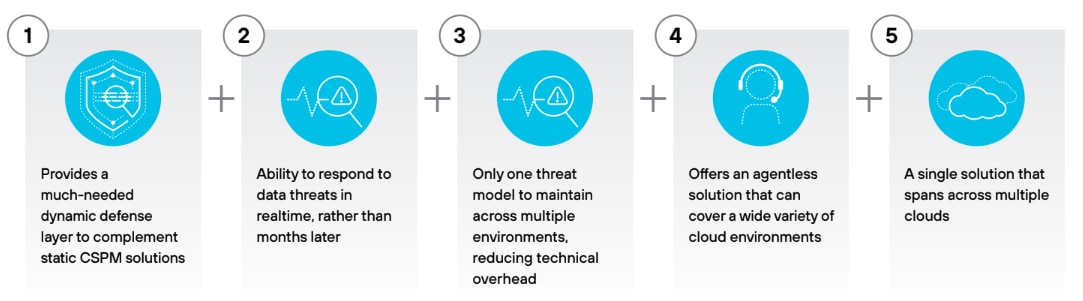

By leveraging advanced data analytics and automated response mechanisms, DDR enables organizations to fortify their cloud data security posture and mitigate the impact of security incidents. As a dynamic defense layer, DDR complements the static security measures provided by cloud security posture management (CSPM) and data security posture management (DSPM) solutions, creating a holistic approach to protecting sensitive data across the enterprise.

Figure 1: Data-centric security and cloud-native application protection deliver a more complete and streamlined solution for security, data, and development teams.

Why Is DDR Important?

Data detection and response is essential in today's dynamic cybersecurity landscape, where the risks posed by data breaches have escalated to unprecedented levels. The 2024 Data Breach Investigations Report (DBIR) paints a concerning picture, revealing a staggering 10,626 confirmed data breaches — the highest number recorded to date.

Understanding the Breadth of the Data Breach Landscape

A significant 68% of the breaches analyzed in the DBIR involved a human element, underscoring the need for DDR solutions that can swiftly identify and respond to the consequences of human error. Compounding the challenge, the report highlights a 180% increase in breaches initiated through the exploitation of vulnerabilities compared with the previous year's report.

Then there's ransomware. No one thinks it will happen to them, yet according to the same 2024 DBIR, ransomware and other extortion techniques accounted for 32% of all breaches. Implementing effective data detection and response strategies helps organizations quickly identify threats and mitigate their impact, safeguarding sensitive data and preserving the organization's reputation and financial well-being.

A Closer Look at the Biggest Risks to Data

As mentioned above, several factors — including human error — put data at risk. Coupled with this are shadow data and data fragmentation, each of which introduces unique vulnerabilities with the potential to compromise the integrity, confidentiality, and availability of data.

Human Error: The Underlying Risk

Human error remains the most pervasive threat to data security. Whether through accidental deletion, mismanagement of sensitive information, weak password practices, or falling victim to phishing attacks, individuals often create unintended vulnerabilities. Even the most sophisticated security systems can be undermined by a single mistake, making human error a foundational risk that permeates all aspects of data security.

Shadow Data

One of the most concerning byproducts of human error is the proliferation of shadow data. The term refers to data that exists outside officially managed and secured systems, often stored in unsanctioned cloud services, on personal devices, or in forgotten backups.

Shadow data typically escapes the reach of regular security protocols, leaving it particularly vulnerable to breaches. Employees may inadvertently create or store this data in insecure locations, unaware of the risks their actions present. The hidden nature of shadow data creates a blind spot in the organization’s security strategy.

Data Fragmentation in the Multicloud Ecosystem

In a multicloud environment, data fragmentation is an inherent risk factor. As data is distributed across multiple cloud platforms, often with varying security standards and management practices, maintaining consistent protection becomes increasingly difficult. Fragmentation complicates the enforcement of uniform security policies and increases the attack surface, especially during data transfer between clouds. The lack of visibility and control over fragmented data further exacerbates the risk, making it challenging to track data movement, detect anomalies, or ensure regulatory compliance.

The Intersection of Risks

Data risk factors intersect: human error leads to the creation of shadow data, which in turn elevates risk when data is fragmented across a multicloud environment. Now consider that data is frequently in motion, moving from one location to another. Left unaddressed, these overlapping risks create a Bermuda triangle of sorts, where sensitive data can slip out of view.

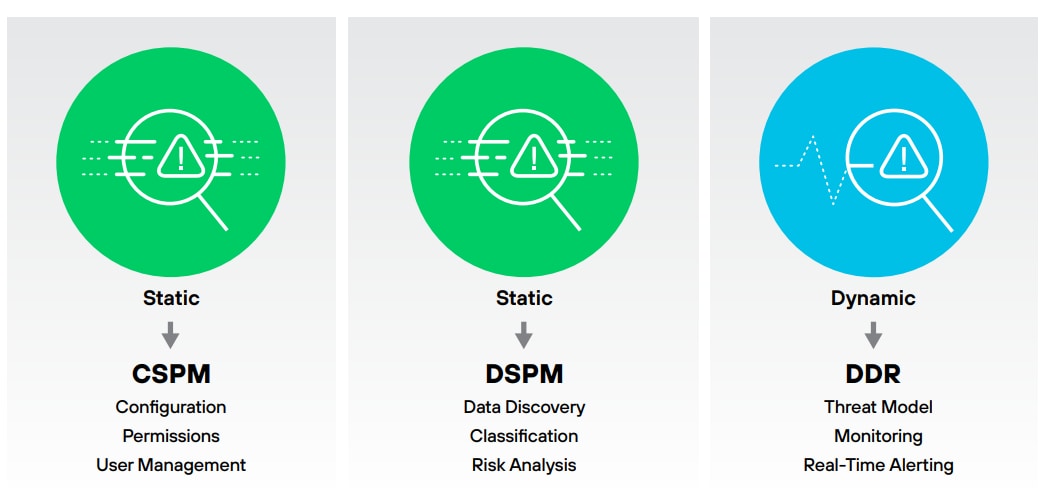

Improving DSPM Solutions with Dynamic Monitoring

The DSPM capabilities we’ve discussed to this point refer primarily to static risk — finding sensitive data, classifying it, and reviewing the access controls and configurations applied to it.

To maintain an effective data security posture, though, you need to continually monitor and analyze data access patterns and user behavior. Data detection and response does just that.

Figure 2: Protecting data in today’s multicloud environments

Data detection and response provides real-time monitoring and alerting capabilities that help security teams quickly detect and respond to potential threats and suspicious activities, while prioritizing issues that put sensitive data at risk. By leveraging machine learning algorithms and advanced log analytics, DDR can identify anomalies in user behavior and access patterns that may indicate a compromised account or insider threat.

A Closer Look at Data Detection and Response (DDR)

DDR describes a set of technology-enabled solutions used to secure cloud data from exfiltration. It provides dynamic monitoring on top of the static defense layers provided by CSPM and DSPM tools.

With today's organizations storing data across various cloud environments, including PaaS (e.g., Amazon RDS), IaaS (virtual machines running datastores), and DBaaS (e.g., Snowflake), it isn't feasible to monitor every data action with traditional agent- or proxy-based tools. DDR solutions instead use real-time log analytics to monitor the cloud environments that store data and detect data risks as soon as they occur.

How DDR Solutions Work

Data detection and response solutions incorporate DSPM capabilities to discover and classify data assets, identify risks such as unencrypted sensitive data or data sovereignty violations, and prioritize remediation by data owners or IT. Once sensitive data assets are mapped, DDR solutions monitor activity through cloud-native logging available in public clouds, generating event logs for every query or read request.
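
The discovery-and-classification step can be approximated with pattern matching over sampled records. The sketch below is deliberately minimal and assumes three illustrative detectors (email addresses, US Social Security numbers, and AWS access key IDs). Commercial DSPM classifiers layer validation, context, and machine learning on top of patterns like these to reduce false positives.

```python
import re

# Illustrative detectors only; real classifiers add validation and context.
PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    # AWS access key IDs: a 4-character prefix followed by 16 uppercase letters or digits.
    "aws_access_key_id": re.compile(r"\b(?:AKIA|ASIA)[A-Z0-9]{16}\b"),
}


def classify(sample_rows: list[str]) -> set[str]:
    """Return the sensitive data types detected in a sample of records."""
    found = set()
    for row in sample_rows:
        for label, pattern in PATTERNS.items():
            if pattern.search(row):
                found.add(label)
    return found


rows = ["jane@example.com,123-45-6789", "order 1042 shipped"]
print(classify(rows))
print(classify(["aws_key=AKIAIOSFODNN7EXAMPLE"]))
```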

Figure 3: Addressing real-time threats and configuration-based issues with DSPM and DDR

The DDR tool analyzes logs in near real-time, applying a threat model to detect suspicious activity, such as data flowing to external accounts. Upon identifying new risks, DDR issues alerts and suggests immediate actions. These alerts are often integrated into SOC or SOAR (security orchestration, automation, and response) solutions for faster resolution and seamless alignment with existing operations.
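
One rule in such a threat model might watch for a database snapshot being shared with an AWS account outside the organization, a direct path for data to flow to an external account. This sketch assumes CloudTrail records for the RDS `ModifyDBSnapshotAttribute` call, with request parameters mirroring that API (`dBSnapshotIdentifier`, `attributeName`, `valuesToAdd`). The `TRUSTED_ACCOUNTS` set and the alert fields are hypothetical. The alert is serialized as JSON, a format SOAR platforms commonly ingest.

```python
import json
from typing import Optional

# Hypothetical set of AWS account IDs that belong to the organization.
TRUSTED_ACCOUNTS = {"111122223333", "444455556666"}


def external_snapshot_shares(record: dict) -> list[str]:
    """Return account IDs outside the organization that a snapshot was just shared with."""
    if record.get("eventSource") != "rds.amazonaws.com":
        return []
    if record.get("eventName") != "ModifyDBSnapshotAttribute":
        return []
    params = record.get("requestParameters") or {}  # field names assumed to mirror the RDS API
    if params.get("attributeName") != "restore":
        return []
    # The value "all" makes the snapshot public; it is not a trusted ID, so it is flagged too.
    return [acct for acct in params.get("valuesToAdd", []) if acct not in TRUSTED_ACCOUNTS]


def to_alert(record: dict) -> Optional[str]:
    """Build a JSON alert for a SOC or SOAR pipeline, or return None if the rule does not fire."""
    outsiders = external_snapshot_shares(record)
    if not outsiders:
        return None
    params = record["requestParameters"]
    return json.dumps({
        "rule": "rds_snapshot_shared_externally",
        "severity": "high",
        "asset": params.get("dBSnapshotIdentifier"),
        "actor": record.get("userIdentity", {}).get("arn"),
        "external_accounts": outsiders,
        "event_time": record.get("eventTime"),
    })


event = {
    "eventSource": "rds.amazonaws.com",
    "eventName": "ModifyDBSnapshotAttribute",
    "eventTime": "2024-05-01T02:13:00Z",
    "userIdentity": {"arn": "arn:aws:iam::111122223333:user/ci-bot"},
    "requestParameters": {
        "dBSnapshotIdentifier": "customers-2024-05-01",
        "attributeName": "restore",
        "valuesToAdd": ["999988887777"],
    },
}
print(to_alert(event))
```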

DDR Use Cases

To envision the types of incidents a DDR solution addresses, consider a few examples seen among our users.

Data Sovereignty Issues

Legislation from recent years creates obligations to store data in specific geographical areas (such as the EU or California). DDR helps detect when data flows to an unauthorized physical location, preventing compliance issues down the line.
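
At its core, a sovereignty check compares where regulated data lands with where it is allowed to live. This sketch assumes that each copy or replication event has already been tagged with the data's classification and a destination region. The `RESIDENCY_POLICY` mapping and the region choices are hypothetical examples.

```python
from dataclasses import dataclass


@dataclass
class CopyEvent:
    asset: str
    data_class: str          # label from the classification step
    destination_region: str  # e.g. "eu-west-1"


# Hypothetical residency policy: data class -> regions where it may be stored.
RESIDENCY_POLICY = {
    "eu_customer_pii": {"eu-west-1", "eu-central-1"},
}


def residency_violations(events: list[CopyEvent], policy=RESIDENCY_POLICY) -> list[CopyEvent]:
    """Return copy events that place regulated data outside its allowed regions."""
    return [
        e for e in events
        if e.data_class in policy and e.destination_region not in policy[e.data_class]
    ]


events = [
    CopyEvent("crm-export", "eu_customer_pii", "us-east-1"),
    CopyEvent("crm-replica", "eu_customer_pii", "eu-central-1"),
    CopyEvent("public-docs", "public", "us-east-1"),  # no policy for this class
]
for v in residency_violations(events):
    print(f"ALERT: {v.asset} ({v.data_class}) copied to {v.destination_region}")
```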

Assets Moved to Unencrypted/Unsecure Storage

As data flows between databases and cloud storage, it can make its way to an insecure datastore (often the result of a temporary but forgotten workaround). DDR alerts security teams to this type of movement.
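
Detecting this kind of movement means combining a dynamic signal (sensitive data was just written somewhere) with static posture facts about the destination (is it encrypted, is it public). The hypothetical `STORES` inventory below stands in for the output of a DSPM or CSPM scan. An unknown destination is treated as risky by default, an assumption made here because a datastore missing from inventory may well be one of those forgotten workarounds.

```python
from typing import NamedTuple


class Store(NamedTuple):
    encrypted: bool
    publicly_accessible: bool


# Hypothetical posture facts, standing in for the output of a DSPM/CSPM scan.
STORES = {
    "prod-customers-db": Store(encrypted=True, publicly_accessible=False),
    "tmp-migration-bucket": Store(encrypted=False, publicly_accessible=True),
}


def movement_risks(source_is_sensitive: bool, destination: str, stores=STORES) -> list[str]:
    """Reasons a data movement event should raise an alert (an empty list means no alert)."""
    if not source_is_sensitive:
        return []
    store = stores.get(destination)
    if store is None:
        return ["unknown_datastore"]  # not in inventory: treat as risky by default
    reasons = []
    if not store.encrypted:
        reasons.append("unencrypted")
    if store.publicly_accessible:
        reasons.append("public")
    return reasons


print(movement_risks(True, "tmp-migration-bucket"))
print(movement_risks(True, "prod-customers-db"))
```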

Snapshots and Shadow Backups

Teams face increasing pressure to do more with data, leading to the prevalence of shadow analytics outside approved workflows. DDR helps find copies of data stored or shared in ways that may cause breaches.
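
Finding shadow copies can be framed as a lineage question: which snapshots or exports were taken from sensitive assets but are not part of an approved backup or analytics workflow? This sketch assumes that copy metadata (identifier, source asset, creator) has already been collected, for example from snapshot-creation events. The `DataCopy` type and all identifiers are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataCopy:
    copy_id: str       # e.g. a snapshot or export identifier
    source_asset: str  # the datastore the copy was taken from
    created_by: str


def shadow_copies(copies: list[DataCopy], sensitive_assets: set[str],
                  approved_ids: set[str]) -> list[DataCopy]:
    """Copies of sensitive assets that are not part of any approved workflow."""
    return [c for c in copies
            if c.source_asset in sensitive_assets and c.copy_id not in approved_ids]


copies = [
    DataCopy("snap-nightly-0501", "orders-db", "backup-service"),  # approved backup
    DataCopy("snap-adhoc-jdoe", "orders-db", "jdoe"),              # ad hoc copy
    DataCopy("snap-test-fixtures", "test-db", "ci"),               # non-sensitive source
]
for c in shadow_copies(copies, {"orders-db"}, {"snap-nightly-0501"}):
    print(f"Unapproved copy {c.copy_id} of {c.source_asset} created by {c.created_by}")
```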


How Does DDR Fit into the Cloud Data Security Landscape?

DDR Vs. CSPM and DSPM

Cloud Security Posture Management

CSPM is about protecting the posture of the cloud infrastructure (such as overly generous permissioning or misconfiguration). It doesn’t directly address data — its context and how it flows across different cloud services.

Data Security Posture Management

DSPM protects data from the inside out. By scanning and analyzing stored data, DSPM tools identify sensitive information such as PII or access codes, classify the data, and evaluate its associated risk. This process provides security teams with a clearer picture of data risk and data flow, enabling them to prioritize cloud assets where a breach could cause the most damage.

While DSPM offers more granular cloud data protection, both CSPM and DSPM are static and focused on posture. They allow organizations to understand where risk lies but offer little in terms of real-time incident response.

In contrast, DDR is dynamic. It focuses on data events happening in real time, sending alerts, and giving security teams a chance to intervene and prevent significant damage. DDR monitors the specific event level, whereas other solutions look at configurations and data at rest.

A Potential Situation

Consider a scenario where an employee has authorized, role-based access to a database containing customer data. The employee plans to leave the company and, before notifying their managers of their intention to leave, copies the database to their personal laptop to take to the next company.

In this example, permissions allow the employee to access the database — and yet, a major data exfiltration event is unfolding. A DDR solution with a well-calibrated threat model detects the unusual batch of data (and other irregularities) contained in this export. The DDR tool sends an alert to the security team and provides full forensics — pinpointing the exact asset and actor involved in the exfiltration. Saving critical time, the security team intervenes before the insider threat achieves its goals.
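
Because the access itself is authorized, a threat model has to look beyond permissions. One way it can catch this export is by scoring several weak signals together. In the hypothetical sketch below, an export earns points for unusual volume, an unmanaged destination, and off-hours timing. The weights, the tenfold volume rule, and the alert threshold are illustrative assumptions that a real deployment would tune.

```python
from dataclasses import dataclass

# Hypothetical weights and threshold; a real threat model would tune these.
WEIGHTS = {"volume": 50, "unmanaged_destination": 30, "off_hours": 20}
ALERT_AT = 70


@dataclass
class ExportEvent:
    actor: str
    rows: int
    destination: str  # e.g. "corp-warehouse" or "personal-laptop"
    hour_utc: int     # 0-23


def export_risk(e: ExportEvent, typical_rows: int, managed: set[str]) -> int:
    """Sum the weights of the irregularities present in an authorized export."""
    score = 0
    if e.rows > 10 * typical_rows:  # far larger than this actor's usual batch
        score += WEIGHTS["volume"]
    if e.destination not in managed:
        score += WEIGHTS["unmanaged_destination"]
    if e.hour_utc < 6 or e.hour_utc >= 22:
        score += WEIGHTS["off_hours"]
    return score


managed = {"corp-warehouse", "bi-cluster"}
routine = ExportEvent("jdoe", 4_000, "corp-warehouse", 14)
suspicious = ExportEvent("jdoe", 2_000_000, "personal-laptop", 23)
for e in (routine, suspicious):
    score = export_risk(e, typical_rows=5_000, managed=managed)
    print(e.actor, e.destination, score, "ALERT" if score >= ALERT_AT else "ok")
```

Scoring several signals, rather than alerting on any single one, is a common way to keep an insider-threat rule from firing on every large but legitimate report.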

Does the CISO Agenda Need an Additional Cybersecurity Tool?

DDR provides mission-critical functionality missing from the existing cloud security stack. When agents aren't feasible, you still need to monitor every activity that concerns your data. DDR protects your data from exfiltration or misuse, as well as from compliance violations. And because it integrates with SIEM and SOAR solutions, letting teams consume alerts in one place, DDR helps reduce operational overhead.

Figure 4: Benefits of data detection and response

The Need for Agentless DLP

Monitoring data assets in real time might seem obvious, but most organizations still lack an adequate way to protect sensitive data as it is accessed and moved. In the traditional, on-premises world, work was mainly done on personal computers connected via an intranet to a server. Security teams monitored traffic and activity by installing agents (software components such as antivirus tools) on every device and endpoint that had access to organizational data.

But you can’t install an agent on a database hosted by Amazon or Google or place a proxy in front of thousands of datastores. The move to cloud infrastructure requires new approaches to data loss prevention (DLP).

The industry gravitated to static solutions that improve the security posture of cloud datastores (CSPM, DSPM) by detecting misconfigurations and exposed data assets. The challenge of monitoring data as it flows, however, remained unaddressed until DDR.

When Static Defense Layers Aren't Enough: Lessons from a Breach

The 2018 Imperva breach began with an attacker gaining access to a snapshot of an Amazon RDS database containing sensitive data. The attacker used an AWS API key stolen from a publicly accessible, misconfigured compute instance.

Would CSPM and DSPM have prevented the breach?

A CSPM solution could identify the misconfiguration, and DSPM could detect sensitive data stored on the misconfigured instance. Neither tool, though, would have been able to identify the unusual behavior once the attacker had gained access that appeared legitimate.

As it unfolded, the Imperva breach went undiscovered for 10 months, until a third party alerted the company. The attacker had exported the database snapshot to an unknown device, and all the while the company, unaware of the breach, couldn't notify its users that their sensitive data had been leaked.

A DDR solution would have addressed the gap by monitoring the AWS account at the event-log level. By potentially identifying the attack in real time, it could have alerted internal security teams and allowed them to respond immediately.
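
The Imperva attacker used a stolen API key. One signal an event-level monitor might evaluate in a case like this is an access key suddenly being used from a source address it has never used before. CloudTrail records do carry `userIdentity.accessKeyId` and `sourceIPAddress` fields; the class below, its learning period, and the sample keys and addresses are hypothetical, and the sketch makes no claim about which rule would actually have caught that particular breach.

```python
from collections import defaultdict


class KeyUsageMonitor:
    """Flag access keys used from a source IP they have not been seen with before."""

    def __init__(self, learning_events: int = 50):
        self.learning_events = learning_events  # events to observe before alerting
        self.seen_ips: dict[str, set[str]] = defaultdict(set)
        self.event_count: dict[str, int] = defaultdict(int)

    def observe(self, access_key_id: str, source_ip: str) -> bool:
        """Record one event; return True if it should raise an alert."""
        self.event_count[access_key_id] += 1
        is_new_ip = source_ip not in self.seen_ips[access_key_id]
        self.seen_ips[access_key_id].add(source_ip)
        # During the learning period, new IPs only extend the baseline.
        return is_new_ip and self.event_count[access_key_id] > self.learning_events


monitor = KeyUsageMonitor(learning_events=3)
# (accessKeyId, sourceIPAddress) pairs as they might be read from CloudTrail records.
stream = [
    ("AKIAEXAMPLEKEY000001", "10.0.0.5"),
    ("AKIAEXAMPLEKEY000001", "10.0.0.5"),
    ("AKIAEXAMPLEKEY000001", "10.0.0.5"),
    ("AKIAEXAMPLEKEY000001", "203.0.113.7"),  # documentation-range IP standing in for an attacker
]
for key, ip in stream:
    if monitor.observe(key, ip):
        print(f"ALERT: {key} used from unfamiliar address {ip}")
```

On its own, a first-seen-address rule is noisy for users on dynamic or mobile networks, which is one reason DDR threat models typically correlate it with what the key does next, such as exporting a snapshot.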

Supporting Innovation Without Sacrificing Security

The cloud is here to stay, as are microservices and containers. As cybersecurity professionals, we can't prevent the organization from adopting technologies that accelerate innovation and give developers more flexibility. But we need to do everything we can to prevent data breaches.

DSPM with DDR offers critical capabilities previously missing from the cloud security landscape: data discovery, classification, static risk management, and continuous, dynamic monitoring of complex multicloud environments. By giving organizations the visibility and control they need to manage their data security posture effectively, these capabilities help them catch incidents earlier and avert or minimize disastrous data loss.

DSPM and Data Detection and Response FAQs

Securing multicloud architectures involves managing security across multiple cloud service providers, each with unique configurations, policies, and compliance requirements.

Consistent security policies and controls must be implemented across all environments to prevent misconfigurations. Data encryption, access controls, and identity management become more complex due to disparate systems. Monitoring and incident response require integration across different platforms, complicating visibility and coordination. Ensuring compliance with regulatory requirements across multiple jurisdictions adds another layer of complexity.

Overcoming these challenges demands a comprehensive, unified security strategy and robust automation tools.

A data inventory is a comprehensive list of all the data assets that an organization has and where they're located. It helps organizations understand and track:

- Types of data they collect, store, and process;

- Sources, purposes, and recipients of that data.

Data inventories can be managed manually or automatically. The reasons for maintaining a data inventory vary — and could include data governance, data management, data protection, data security, and data compliance.

For example, having a data inventory can help organizations identify and classify sensitive data, assess the risks associated with different types of data, and implement appropriate controls to protect that data. It can also help organizations understand which data they have available to support business objectives, or to generate specific types of analytics reports.
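
An automated inventory can start as nothing more than structured records that capture the fields listed above. The `InventoryEntry` type and the set of sensitive data types in this sketch are hypothetical. The query at the end shows how an inventory supports the classification and risk-assessment uses just described.

```python
from dataclasses import dataclass, field


@dataclass
class InventoryEntry:
    asset: str             # e.g. "s3://customer-records"
    location: str          # cloud provider and region
    data_types: set[str]   # what is collected, stored, or processed
    sources: list[str] = field(default_factory=list)
    purposes: list[str] = field(default_factory=list)
    recipients: list[str] = field(default_factory=list)


# Hypothetical labels treated as sensitive for this example.
SENSITIVE_TYPES = {"pii", "payment_card", "credentials"}


def sensitive_assets(inventory: list[InventoryEntry]) -> list[str]:
    """Assets holding at least one sensitive data type, sorted by name."""
    return sorted(e.asset for e in inventory if e.data_types & SENSITIVE_TYPES)


inventory = [
    InventoryEntry("s3://customer-records", "aws/eu-west-1", {"pii"},
                   sources=["web signup"], purposes=["billing"],
                   recipients=["payments vendor"]),
    InventoryEntry("bq://marketing.web_stats", "gcp/us", {"aggregate_metrics"}),
]
print(sensitive_assets(inventory))
```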

Data in use refers to data that is actively being processed and held in computer memory, such as RAM, CPU caches, or CPU registers. Rather than sitting passively in stable storage, it moves through various systems, each of which could be vulnerable to attack. Data in use can be a target for exfiltration attempts because it may contain sensitive information such as payment card (PCI) data or PII.

To protect data in use, organizations can use encryption techniques such as end-to-end encryption (E2EE) and hardware-based approaches such as confidential computing. On the policy level, organizations should implement user authentication and authorization controls, review user permissions, and monitor file events.