- 1. How Denial-of-Service Attacks Work

- 2. Denial-of-Service in Adversary Campaigns

- 3. Real-World Denial-of-Service Attacks

- 4. Detection and Indicators of Denial-of-Service Attacks

- 5. Prevention and Mitigation of Denial-of-Service Attacks

- 6. Response and Recovery from Denial-of-Service Attacks

- 7. Operationalizing Denial-of-Service Defense

- 8. DoS Attack FAQs

What Is a Denial of Service (DoS) Attack?

A denial-of-service (DoS) attack is a cyber attack that inundates a system, application, or network with excessive traffic or resource requests, rendering it unavailable to legitimate users and disrupting operations, often without breaching security perimeters.

DoS Attack Explained

A denial-of-service (DoS) attack deliberately exhausts the availability of a system, service, or network resource by flooding it with requests or exploiting operational bottlenecks. The objective is to degrade or disrupt service delivery to legitimate users — and to do so without requiring access to internal systems or data.

The MITRE ATT&CK framework classifies denial of service under multiple techniques, most notably T1498: Network Denial of Service and T1499: Endpoint Denial of Service, along with sub-techniques such as T1499.001: OS Exhaustion Flood. These cover attacks at both the infrastructure and application layers, including volumetric floods, protocol abuse, and resource starvation.

Related Terminology and Forms

DoS encompasses multiple subtypes. A distributed denial-of-service (DDoS) attack leverages botnets or multiple compromised systems to amplify scale and redundancy. Application-layer DoS targets specific features or endpoints to exhaust memory, thread pools, or compute cycles with low-volume but high-impact requests.

SYN floods, HTTP floods, UDP amplification, IP fragmentation, and slowloris attacks are all distinct forms of DoS, each abusing a different network or protocol behavior.

Modern Variants and Target Shifts

Early DoS attacks aimed at bandwidth saturation. Modern variants prioritize economic and operational disruption. Attackers increasingly target API endpoints, cloud service limits, or WAF configurations. Cloud-native applications face new risks from event-driven architectures and autoscaling thresholds, where DoS can trigger cascading failures or inflated cloud costs.

Attackers also deploy multivector DoS campaigns, combining volumetric, protocol, and application-layer tactics to evade detection and amplify effectiveness. Some campaigns serve as decoys, distracting responders from lateral movement or data exfiltration running in parallel.

Denial of service, once considered noisy and unsophisticated, now functions as a precision weapon in ransomware extortion, geopolitical disruption, and nation-state signaling. Its evolution reflects the shifting nature of operational risk in highly distributed, API-driven environments.

How Denial-of-Service Attacks Work

DoS attacks aim to overwhelm a target by consuming finite resources. The target may be a web server, network device, application endpoint, or cloud-based service. Attacks either flood the system with excessive traffic or exploit protocol logic to trigger failure states. In either case, the outcome is degraded or halted availability.

A typical attack begins with target reconnaissance, identifying exposed services, bandwidth limitations, session handling behavior, or rate enforcement gaps. Once mapped, attackers select a strategy: volumetric, protocol, or application-layer disruption. Each vector has different characteristics and defensive implications.

Volumetric DoS and Amplification Tactics

Volumetric attacks saturate bandwidth with junk traffic. Reflection and amplification through DNS, NTP, or CLDAP are common. The attacker sends small queries with the victim's IP spoofed as the source, and the servers direct much larger responses at the victim. Open resolvers and poorly configured UDP services make this scale possible.
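
A reflection attack gets its leverage from the ratio of response size to request size. The short Python sketch below computes that bandwidth amplification factor and the resulting traffic at the victim. The 60-byte query and 3,000-byte response are hypothetical round numbers, not measurements of any particular protocol.

```python
def amplification_factor(request_bytes: int, response_bytes: int) -> float:
    """Bytes reflected toward the victim per byte the attacker sends."""
    if request_bytes <= 0:
        raise ValueError("request size must be positive")
    return response_bytes / request_bytes


def reflected_gbps(attacker_gbps: float, factor: float) -> float:
    """Traffic arriving at the victim if every spoofed query is answered in full."""
    return attacker_gbps * factor


# Hypothetical sizes for illustration only.
factor = amplification_factor(request_bytes=60, response_bytes=3000)
print(factor)                       # 50.0
print(reflected_gbps(2.0, factor))  # 100.0
```

At a factor of 50, each gigabit of spoofed queries becomes about 50 Gbps at the victim. That multiplier is why a modest botnet can saturate large transit links.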

UDP floods require no session handshake, so source addresses are easy to spoof. Floods can be generated with tools such as hping3 (which supports randomized source addresses), Low Orbit Ion Cannon (LOIC), or custom Python scripts. When launched from botnets, these floods can exceed hundreds of Gbps, overwhelming network interfaces, firewalls, or transit links.

Protocol-Level Abuse

Protocol attacks manipulate stateful behavior in TCP/IP, HTTP, or SSL/TLS layers. A SYN flood, for example, exploits the TCP handshake by sending a large volume of SYN packets without completing the connection. The server allocates resources for each half-open session until capacity is exhausted.
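
A toy model of a listener's half-open connection backlog shows how this exhaustion plays out. The Python sketch below rests on several simplifying assumptions: the backlog has a fixed size, half-open entries use a fixed timeout, and legitimate handshakes complete instantly. It does not model any real TCP stack, and it leaves out defenses such as SYN cookies.

```python
from collections import deque


def simulate_syn_backlog(capacity: int, timeout_s: int, attack_syn_per_s: int,
                         legit_syn_per_s: int, duration_s: int) -> int:
    """Return how many legitimate SYNs are dropped because the backlog is full.

    Simplified model (an assumption, not a specific kernel's behavior):
    - each spoofed SYN occupies one backlog slot until it times out;
    - each legitimate SYN completes within the same second, so it only
      needs a free slot at arrival time.
    """
    half_open = deque()  # expiry times of attacker half-open entries
    dropped_legit = 0
    for now in range(duration_s):
        # Expire half-open entries whose timeout has elapsed.
        while half_open and half_open[0] <= now:
            half_open.popleft()
        # Spoofed SYNs arrive and claim any free slots.
        for _ in range(attack_syn_per_s):
            if len(half_open) < capacity:
                half_open.append(now + timeout_s)
        # Legitimate SYNs need a free slot; otherwise they are dropped.
        free = capacity - len(half_open)
        dropped_legit += max(0, legit_syn_per_s - free)
    return dropped_legit


print(simulate_syn_backlog(128, 30, 0, 5, 10))    # 0
print(simulate_syn_backlog(128, 30, 200, 5, 10))  # 50
```

With no attack traffic, no legitimate SYN is dropped. Once spoofed SYNs arrive faster than entries expire, the backlog stays full and every legitimate SYN in the window is refused.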

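In HTTP/2 environments, attackers abuse stream multiplexing to generate far more server work per connection than traditional rate filters expect. One method is to open and immediately cancel large numbers of streams on a single connection. Another is to craft malformed headers that bypass those filters. TLS renegotiation and session resumption mechanisms also become attack surfaces in poorly optimized configurations.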

Application-Layer Disruption

Application-layer attacks are stealthier and more resource-efficient. They mimic legitimate requests but are designed to consume server resources disproportionate to their size. A common technique is a slow POST, where an attacker opens a connection and trickles in the body content byte-by-byte, preventing the server from freeing resources.
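
A common server-side countermeasure is to require a minimum average upload rate once a grace period has passed. Apache's mod_reqtimeout, for example, accepts a `MinRate` for request bodies in its `RequestReadTimeout` directive. The Python sketch below shows only the decision logic. The `BodyProgress` record and the default thresholds are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class BodyProgress:
    started_at: float     # seconds (monotonic clock) when the request body began
    bytes_received: int   # request-body bytes received so far


def should_drop(conn: BodyProgress, now: float,
                grace_s: float = 10.0, min_bytes_per_s: float = 500.0) -> bool:
    """Return True if the upload averages below the minimum rate after the grace period.

    Defaults are illustrative; legitimate clients on slow links must still pass.
    """
    elapsed = now - conn.started_at
    if elapsed < grace_s:
        return False  # too early to judge
    return conn.bytes_received / elapsed < min_bytes_per_s


print(should_drop(BodyProgress(started_at=0.0, bytes_received=120), now=60.0))        # True
print(should_drop(BodyProgress(started_at=0.0, bytes_received=2_000_000), now=60.0))  # False
```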

In REST and GraphQL APIs, attackers generate high-cardinality queries, over-fetch nested data, or hit endpoints with high CPU cost per request. These techniques require fewer requests to degrade performance and often bypass volumetric detection.
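
One common guard against deeply nested GraphQL queries is a depth limit checked before execution. The Python sketch below approximates depth by counting braces. This is a simplification: it ignores braces inside string literals, and it counts input-object braces as nesting. The `MAX_DEPTH` value is a hypothetical setting. A real server should compute depth on the parsed query document.

```python
def max_selection_depth(query: str) -> int:
    """Approximate GraphQL nesting depth by tracking brace depth."""
    depth = 0
    deepest = 0
    for ch in query:
        if ch == "{":
            depth += 1
            deepest = max(deepest, depth)
        elif ch == "}":
            depth -= 1
            if depth < 0:
                raise ValueError("unbalanced braces")
    if depth != 0:
        raise ValueError("unbalanced braces")
    return deepest


MAX_DEPTH = 6  # hypothetical limit; derive it from what real clients need


def admit(query: str) -> bool:
    """Reject queries nested deeper than MAX_DEPTH before they reach resolvers."""
    return max_selection_depth(query) <= MAX_DEPTH


print(admit("{ user(id: 1) { name } }"))  # True
print(admit("{" * 7 + "x" + "}" * 7))     # False
```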

Cloud-Specific Weaknesses

Cloud-native applications expose unique failure conditions. Autoscaling groups may scale out under attack, causing cost explosions. Serverless platforms such as AWS Lambda enforce concurrency limits, so a flood of invocations can exhaust them and throttle legitimate requests. API gateways and load balancers may be overloaded before application backends are reached.

Threat actors also target cloud-specific throttling limits, such as burst quotas, memory ceilings, or request-per-second thresholds. Some campaigns aim to force a service degradation that triggers failover or alerts, distracting responders from simultaneous intrusions.

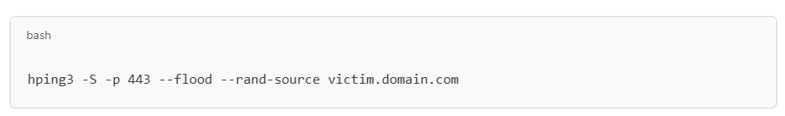

Figure 1: SYN flood with spoofed packets

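The command in Figure 1 sends a continuous stream of SYN packets with randomized source IPs to port 443, consuming the server's connection queue. The attacker holds no connection state, and every packet carries a different source address, so per-IP blocking is ineffective. As a result, the flood is difficult to stop without deep inspection or behavioral rate limiting.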

DoS attacks vary in volume, sophistication, and intent. Some seek disruption. Others act as diversions. A growing number exploit business logic and infrastructure dependencies, turning availability into a calculated attack surface.

Denial-of-Service in Adversary Campaigns

Denial-of-service attacks rarely function in isolation. In targeted operations, adversaries integrate DoS techniques into multiphase kill chains designed to distract, delay, or degrade response during more consequential activity. DoS becomes a supporting mechanism — tactical in timing, strategic in outcome.

Tactical Use for Distraction and Diversion

Attackers often deploy DoS to mask intrusion. While defenders focus on restoring service availability, adversaries exploit the disruption to move laterally, escalate privileges, or exfiltrate data. For example, a burst of HTTP floods may coincide with credential brute force attempts, diluting telemetry visibility or delaying SOC triage.

Some threat actors use volumetric floods to target logging infrastructure or APIs that feed SIEM and XDR pipelines. Overloading telemetry channels can break correlations, disable alerts, or create windows of unmonitored access. This approach allows stealthier techniques — such as token theft or privilege misuse — to unfold with less scrutiny.

Staged DoS as Precursor or Follow-Up

DoS can serve as a prelude to ransomware deployment. By disrupting remote backups or isolating incident responders, attackers limit remediation options before triggering encryption. In double extortion campaigns, a follow-on DoS attack may add pressure during ransom negotiations or enforce payment deadlines.

Some groups weaponize DoS post-exfiltration. Once data has been stolen, they launch availability attacks to inflict additional harm, disrupt recovery, or assert leverage in high-profile extortion campaigns. In these cases, the attack chain involves a full compromise followed by denial.

Kill Chain Positioning

- Reconnaissance: Attackers identify weak endpoints, exposed APIs, or cloud service thresholds. Misconfigured DDoS protection or unmonitored ports are key indicators for DoS viability.

- Initial Access: DoS does not provide access by itself but is often coordinated with phishing or credential stuffing to degrade visibility or response.

- Execution: Application-layer DoS is often executed alongside living-off-the-land tactics, especially when attackers need to overwhelm endpoint detection.

- Exfiltration: DoS floods may saturate logs or outbound filtering systems, reducing evidence of large data transfers or covert channels.

- Impact: The DoS event, if timed after primary exploitation, serves as an accelerant to reputational damage or operational disruption.

Dependencies and Connective Techniques

Denial of service depends on exploitable asymmetries. Whether resource exhaustion, protocol behavior, or cloud misconfiguration, the attack works best when minimal input creates disproportionate output. Attackers amplify effect by combining DoS with:

- Credential-based attacks to disrupt MFA infrastructure or identity verification processes

- Phishing or pretexting to time DoS during critical response actions

- CSRF or session fixation to exploit user reauthentication flows degraded by DoS pressure

- DNS manipulation to redirect legitimate traffic into unmonitored or malicious paths

Denial of service isn’t just noise — it’s an adaptable tool in complex operations. Whether used to blind, delay, or coerce, its role in modern campaigns reflects a growing understanding of how infrastructure fragility shapes cybersecurity outcomes.

Real-World Denial-of-Service Attacks

Denial-of-service attacks have escalated in scale, targeting industries with high availability requirements and limited operational tolerance for disruption. Below are select examples that illustrate different motivations, methodologies, and consequences across verticals.

Cloud Provider Disruption: Google Cloud, AWS, and Azure (2023–2024)

In August 2023, Google Cloud mitigated what it reported as the largest Layer 7 (application layer) DDoS attack on record at the time, peaking at 398 million requests per second. The attack exploited an HTTP/2 weakness later tracked as CVE-2023-44487 ("HTTP/2 Rapid Reset"). In this technique, clients open streams and immediately cancel them, which significantly amplifies load per connection. Google, Microsoft, AWS, and Cloudflare disclosed related attacks against their infrastructure in a coordinated announcement in October 2023. Attackers exploited the same protocol-level flaw to target APIs and front-end services.

These events revealed widespread reliance on shared transport and protocol stacks across major providers. The attack didn’t breach data, but it triggered global rate limiting, auto-scaling failures, and service degradation in customer environments. It also forced emergency patches across CDNs, proxies, and web servers running HTTP/2.

Healthcare Disruption: Denmark’s Hospitals (2023)

In May 2023, a DDoS campaign targeting the Danish healthcare system temporarily disrupted access to emergency booking systems and delayed treatment workflows across multiple hospitals. The attack originated from compromised IoT devices and focused on saturating inbound network bandwidth through volumetric floods.

While no patient data was exposed, hospital administrators reported operational delays, appointment cancellations, and a ripple effect on dependent digital services. Healthcare regulators flagged the event as a failure in regional incident coordination and response preparedness.

Financial Sector Targeting: HSBC (2023)

HSBC confirmed a DDoS attack in November 2023 that disrupted mobile banking and customer web access. While the bank maintained transactional integrity, customers experienced login timeouts and intermittent service unavailability. Attack telemetry pointed to a botnet distributed across residential proxies and abused API endpoints not previously load tested under attack conditions.

The incident exposed a critical business risk: customer churn due to perceived unreliability. Financial institutions operate in a zero-tolerance environment for latency, making them prime targets for precision DoS at the application and identity verification layers.

Hacktivist-Driven Attacks: NoName057(16) Campaigns (2023–2024)

The pro-Russian hacktivist group NoName057(16) launched repeated DDoS attacks against government, financial, and transport websites in Europe and North America throughout 2023 and into 2024. Using open-source tooling and Telegram-based coordination, the group ran an ongoing campaign of publicly claimed takedowns against countries it perceived as hostile to Russian interests.

Unlike financially motivated campaigns, these attacks focused on high-visibility disruption rather than persistence. Targets included Poland’s parliament portal, Canada’s tax authority, and U.S. city websites. Although low in sophistication, the attacks were effective because the group retargeted consistently and exploited underprotected infrastructure.

Industry Trends and Frequency

According to Cloudflare's 2024 report, application-layer DDoS attacks increased by 79% year-over-year, with API endpoints and authentication services among the most targeted. Sectors with high digital reliance — banking, SaaS, e-commerce, and healthcare — continue to see sustained targeting, often by opportunistic groups repurposing older vulnerabilities with new traffic amplification vectors.

DDoS has become a multipurpose weapon, used for disruption in extortion, distraction during breaches, coercion during negotiations, or simple ideological signaling. Attackers choose targets based on technical exposure. A heavy operational dependence on uptime and a high public profile also make a target more attractive.

Detection and Indicators of Denial-of-Service Attacks

DoS attacks leave distinct traces across network, application, and system layers. Effective detection requires correlation between traffic telemetry, behavioral anomalies, and system performance metrics. The challenge lies in distinguishing malicious traffic from legitimate spikes caused by load tests, promotions, or onboarding surges.

Network and Protocol-Level Indicators

At the network layer, volumetric attacks produce sudden traffic spikes from a broad range of IP addresses, often with spoofed origins. Tools like NetFlow, sFlow, or VPC Flow Logs can reveal surges in inbound UDP or TCP SYN packets with low connection completion rates.
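
The low completion rate can be computed directly from flow records. The Python sketch below assumes a simplified `(dst_ip, tcp_flags)` record for each client-to-server flow. Here `tcp_flags` is the cumulative OR of the flags seen in the flow, which is how NetFlow v5 exports it. Adapt the field names to your collector.

```python
from collections import defaultdict

SYN = 0x02
ACK = 0x10


def syn_completion_ratio(flows):
    """Per destination, the share of SYN-bearing client flows that also carried an ACK.

    `flows` is an iterable of (dst_ip, tcp_flags) tuples for the client-to-server
    direction only; tcp_flags is the cumulative OR of flags seen in the flow.
    A flow that shows SYN but never ACK never completed the handshake.
    """
    syn_flows = defaultdict(int)
    completed = defaultdict(int)
    for dst_ip, flags in flows:
        if flags & SYN:
            syn_flows[dst_ip] += 1
            if flags & ACK:
                completed[dst_ip] += 1
    return {dst: completed[dst] / total for dst, total in syn_flows.items()}


# Hypothetical window: 90 bare SYN flows and 10 completed ones toward one server.
window = [("192.0.2.10", SYN)] * 90 + [("192.0.2.10", SYN | ACK)] * 10
print(syn_completion_ratio(window))  # {'192.0.2.10': 0.1}
```

A strong SYN-flood candidate is a destination whose ratio falls sharply while its SYN count climbs. What counts as "sharp" should come from that service's own baseline.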

Patterns such as asymmetric request/response ratios, excessive small-packet floods, or malformed headers in DNS, NTP, or HTTP packets can signal reflection or amplification campaigns. Many DDoS mitigation systems rely on entropy analysis of source IPs and TTL values to flag spoofed traffic.
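
Entropy analysis takes only a few lines of Python. The sketch assumes that normal traffic comes from a relatively stable client population. Under that assumption, randomly spoofed sources push the Shannon entropy of the source-IP distribution well above its usual level. The baseline and the 2-bit margin are illustrative, not recommended values.

```python
import math
from collections import Counter


def shannon_entropy(values) -> float:
    """Shannon entropy, in bits, of the empirical distribution of `values`."""
    counts = Counter(values)
    if not counts:
        return 0.0
    total = sum(counts.values())
    # Each term is p * log2(1/p), which is never negative.
    return sum((c / total) * math.log2(total / c) for c in counts.values())


def entropy_alert(window_src_ips, baseline_bits: float, margin_bits: float = 2.0) -> bool:
    """Flag a window whose source-IP entropy rises well above the learned baseline.

    If every packet carries a distinct spoofed source, entropy approaches
    log2 of the number of packets in the window.
    """
    return shannon_entropy(window_src_ips) > baseline_bits + margin_bits


print(shannon_entropy(["192.0.2.1"] * 8))  # 0.0
spoofed = [f"10.0.{i // 256}.{i % 256}" for i in range(1024)]
print(entropy_alert(spoofed, baseline_bits=4.0))  # True
```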

Application and API Layer Patterns

Application-layer DoS presents as persistent requests targeting specific endpoints, often with valid headers but abnormal frequency or structure. Attackers may call resource-intensive routes — like search, reporting, or export APIs — with randomized parameters to avoid caching.

Look for anomalies such as:

- Sudden increase in POST or GET requests to low-traffic endpoints

- Long-lived connections with minimal data transfer (e.g., slow POST)

- Requests with excessive nesting or query depth in GraphQL or REST APIs

- Repeated bursts of requests with short user-agent strings or inconsistent language headers

Authentication endpoints also become hot spots for credential-based floods or session exhaustion, often preceding or accompanying volumetric activity.
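
The first anomaly in the list is a surge on endpoints that normally see little traffic. One way to catch it is to compare each endpoint's current request count with its own baseline. In this Python sketch the ratio and minimum-volume thresholds are hypothetical. The input dictionaries stand in for whatever per-endpoint counters your gateway or log pipeline produces.

```python
def endpoint_spikes(current_counts, baseline_counts, min_ratio=10.0, min_requests=100):
    """Return endpoints whose current request count is far above their own baseline.

    Both arguments map endpoint path -> requests per window. Endpoints with no
    baseline are treated as having a baseline of 1 request. `min_requests`
    keeps tiny endpoints from alerting on noise. Thresholds are illustrative.
    """
    flagged = []
    for path, count in current_counts.items():
        base = max(baseline_counts.get(path, 0), 1)
        if count >= min_requests and count / base >= min_ratio:
            flagged.append(path)
    return sorted(flagged)


print(endpoint_spikes({"/api/export": 5000, "/home": 12000},
                      {"/api/export": 20, "/home": 10000}))  # ['/api/export']
```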

System-Level Effects and Resource Saturation

On the target system, DoS attacks manifest as rapidly escalating CPU usage, memory allocation failures, or thread pool starvation. Web servers may hit connection limits, queue backlogs, or timeout thresholds. Load balancers and WAFs may log increased 503, 429, or 504 errors.

Logging systems under duress may begin dropping events, introducing blind spots just as visibility becomes critical. Monitoring tools should alert on deviations in request-per-second (RPS), error rate, and backend latency — particularly during off-peak hours or non-release windows.
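
Alerting on deviations in RPS, error rate, or latency needs a baseline that adapts over time. One simple option is an exponentially weighted moving average paired with an exponentially weighted variance. The Python sketch below is one possible approach, not a prescribed method, and its `alpha`, `k`, and `warmup` values are assumptions to tune per metric.

```python
import math


class EwmaDetector:
    """Flag samples that deviate sharply from an exponentially weighted baseline.

    alpha: how quickly the baseline adapts (0 < alpha <= 1).
    k: how many exponentially weighted standard deviations count as anomalous.
    warmup: samples to observe before any alert can fire.
    All three defaults are illustrative assumptions.
    """

    def __init__(self, alpha: float = 0.1, k: float = 4.0, warmup: int = 10):
        self.alpha = alpha
        self.k = k
        self.warmup = warmup
        self.mean = None
        self.var = 0.0
        self.n = 0

    def update(self, x: float) -> bool:
        """Score one sample (e.g. RPS in the last interval), then fold it into the baseline."""
        self.n += 1
        if self.mean is None:
            self.mean = x
            return False
        deviation = x - self.mean
        anomalous = (self.n > self.warmup
                     and abs(deviation) > self.k * math.sqrt(self.var))
        # Incremental exponentially weighted mean and variance.
        self.mean += self.alpha * deviation
        self.var = (1 - self.alpha) * (self.var + self.alpha * deviation ** 2)
        return anomalous


detector = EwmaDetector()
for rps in [100.0, 104.0] * 20:   # normal, slightly noisy traffic
    detector.update(rps)
print(detector.update(1000.0))    # True: sudden surge
```

The baseline keeps adapting, so a sustained attack will gradually be absorbed into it. Teams that want a persistent alert can freeze updates while a sample is flagged.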

Key Indicators of Compromise (IOCs)

- IP patterns: High-volume requests from spoofed or dynamically rotating IPs

- Header anomalies: Missing or forged headers, unusual content-length values, or malformed HTTP/2 frames

- Request rate spikes: Sudden, sustained surges in requests per second to single endpoints or services

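- Port saturation: Unusual inbound volume involving UDP/TCP ports 53 (DNS), 123 (NTP), 389 (CLDAP), or 443 (HTTPS); reflected amplification traffic typically arrives with the reflector's service port as its source port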

- Payloads: Repeated malformed requests crafted to trigger buffer overflows or resource locks

Monitoring Recommendations for SIEM/XDR Integration

- Correlate firewall, load balancer, and web server logs to detect asymmetry between inbound and outbound flows

- Set dynamic thresholds for API RPS, request complexity, and concurrent connections per IP

- Monitor authentication logs for abnormal failure rates or login spikes tied to DoS timing

- In cloud environments, track auto-scaling events and cost anomalies as early indicators of service saturation

- Alert on drops in log volume or telemetry throughput, which may indicate monitoring suppression under load
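
The last recommendation, alerting when telemetry volume drops, is easy to overlook because most rules fire on increases. This Python sketch compares the current window's event count with the median of recent windows. The 50% threshold and six-window minimum are illustrative assumptions.

```python
from statistics import median


def telemetry_drop(history, current, min_fraction=0.5, min_history=6):
    """Return True if the current window's event count fell well below the recent median.

    `history` holds event counts for preceding windows of equal length. A sudden
    drop during a traffic surge can mean collectors are shedding events.
    """
    if len(history) < min_history:
        return False  # not enough context yet
    baseline = median(history)
    return baseline > 0 and current < min_fraction * baseline


print(telemetry_drop([1000, 1020, 980, 1010, 990, 1005], current=300))  # True
```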

Denial of service is noisy by nature, but that noise often hides precision. Effective detection depends on layered observability across transport, logic, and behavior — not just traffic volume.

Prevention and Mitigation of Denial-of-Service Attacks

Preventing denial-of-service attacks requires controls that limit resource abuse, reduce exposure, and shift failure gracefully. Many mitigations fail not because of missing tools, but because configurations don’t match the architecture’s actual risk surface.

Architectural Design and Infrastructure Resilience

Resilient systems absorb impact by design. Avoid tight coupling between frontend services and critical infrastructure. Introduce buffering layers — such as queues, caches, or circuit breakers — between public endpoints and core workloads. Use fail-open or fail-fast policies to avoid cascading failures under load.
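
Circuit breakers are a standard way to implement fail-fast behavior. The Python sketch below is minimal and single-threaded. Once a dependency has failed repeatedly, the breaker rejects calls immediately. After a cool-down, it lets calls through again as trials. The thresholds and the injectable `clock` parameter are assumptions made for illustration and testing.

```python
import time


class CircuitBreaker:
    """Minimal, single-threaded circuit breaker.

    closed: calls pass through and failures are counted.
    open: calls are rejected immediately until reset_after_s has elapsed.
    After the cool-down, calls are let through as trials; a failure
    re-opens the circuit, a success closes it.
    """

    def __init__(self, failure_threshold: int = 5, reset_after_s: float = 30.0,
                 clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_after_s = reset_after_s
        self.clock = clock          # injectable for testing
        self.failures = 0
        self.opened_at = None       # None means the circuit is closed

    def call(self, fn, *args, **kwargs):
        if self.opened_at is not None and self.clock() - self.opened_at < self.reset_after_s:
            raise RuntimeError("circuit open: failing fast")
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            # A failed trial call, or too many failures while closed, (re)opens the circuit.
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        self.failures = 0
        self.opened_at = None
        return result
```

Under load, rejecting calls to a struggling backend keeps request threads from piling up behind it. That pile-up is one of the cascading-failure paths described above.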

Cloud-native defenses must be embedded at the edge. Deploy reverse proxies or API gateways with aggressive timeouts, payload inspection, and retry suppression. Position autoscaling policies behind explicit rate constraints to prevent runaway expansion or cost shock during sustained floods.
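
Putting autoscaling behind an explicit constraint can be as simple as clamping the computed replica count and treating anything above the ceiling as load to shed. In this Python sketch, `rps_per_replica` and the replica bounds are hypothetical capacity-planning inputs.

```python
import math


def desired_replicas(observed_rps: float, rps_per_replica: float,
                     min_replicas: int, max_replicas: int) -> int:
    """Replicas needed for the observed load, clamped to [min_replicas, max_replicas]."""
    needed = math.ceil(observed_rps / rps_per_replica)
    return max(min_replicas, min(needed, max_replicas))


def shed_rps(observed_rps: float, rps_per_replica: float, replicas: int) -> float:
    """Load the capped fleet cannot serve; it must be throttled or scrubbed upstream."""
    return max(0.0, observed_rps - replicas * rps_per_replica)


replicas = desired_replicas(1_000_000, 100, min_replicas=2, max_replicas=20)
print(replicas, shed_rps(1_000_000, 100, replicas))  # 20 998000
```

Consider a 1,000,000 RPS flood against a 20-replica ceiling at 100 RPS each. The fleet stays at 20 replicas, and rate limiting or upstream scrubbing must handle the remaining 998,000 RPS instead of new spend.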

Rate Limiting and Adaptive Throttling

Enforce per-IP and per-user rate limits at the application gateway, not at the app server. Burst ceilings and token-bucket algorithms work best when tuned against production baselines. High-cost endpoints should include concurrency controls to restrict expensive operations, such as PDF generation or large data fetches.
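
A per-client token bucket is one common way to implement these burst ceilings. In the Python sketch below, each key (an IP address or user ID) gets a bucket that refills at `rate` tokens per second up to `capacity`. The optional `cost` argument lets expensive endpoints consume more tokens per call. The class names and parameters are illustrative, not a specific gateway's API.

```python
import time


class TokenBucket:
    """Refills at `rate` tokens per second up to `capacity`, the burst ceiling."""

    def __init__(self, rate: float, capacity: float, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock            # injectable for testing
        self.tokens = capacity        # start full so normal bursts pass
        self.updated = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Add tokens for the time elapsed since the last check, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


class PerKeyLimiter:
    """Keeps one TokenBucket per client key, such as an IP address or user ID."""

    def __init__(self, rate: float, capacity: float, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.buckets = {}

    def allow(self, key: str, cost: float = 1.0) -> bool:
        bucket = self.buckets.get(key)
        if bucket is None:
            bucket = TokenBucket(self.rate, self.capacity, self.clock)
            self.buckets[key] = bucket
        return bucket.allow(cost)


limiter = PerKeyLimiter(rate=5.0, capacity=10.0)
print(limiter.allow("203.0.113.7"))           # True: bucket starts full
print(limiter.allow("203.0.113.7", cost=20))  # False: costlier than the burst ceiling
```

The per-key dictionary grows with every new key. Under a spoofed-source flood it needs eviction, for example dropping buckets that have been idle for a while, or it becomes a memory-exhaustion vector of its own.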

Use adaptive throttling to lower limits in real time during an attack, not just fixed ceilings. Some CDNs and API management layers support behavior-aware rate limiting, where IP reputation or anomaly scores inform throttle thresholds.
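
Adaptive throttling can be layered on top of a fixed limit by scaling that limit with a risk signal. This Python sketch assumes an anomaly score already normalized to [0, 1], for example from IP reputation or a behavioral model. It lowers the limit linearly but keeps a floor, so misclassified legitimate users retain some access.

```python
def adaptive_limit(base_rps: float, anomaly_score: float, floor_fraction: float = 0.1) -> float:
    """Lower a per-client rate limit as risk rises, never below a floor.

    anomaly_score is assumed to be in [0, 1]: 0 = normal, 1 = highly suspicious.
    Out-of-range scores are clamped. floor_fraction is an illustrative default.
    """
    score = min(max(anomaly_score, 0.0), 1.0)
    return base_rps * max(1.0 - score, floor_fraction)


for score in (0.0, 0.5, 0.95):
    print(score, adaptive_limit(100.0, score))
```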

Network Rules and Segmentation

Block known amplification vectors (DNS, NTP, SSDP, CLDAP) at the edge unless explicitly required. Network operators apply ingress filtering (BCP 38) at their edges to drop packets with spoofed source addresses before they leave the originating network. This denies reflection attacks the spoofing they depend on. For internal workloads, segment services to limit lateral exhaustion risks, and route traffic through rate-aware firewalls.
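
The decision logic behind these edge rules fits in a few lines, although production enforcement happens in routers and firewalls (uRPF, ACLs) rather than in application code. In this Python sketch, the assigned prefix is a documentation address range. The set of hosts that legitimately query DNS or NTP is a hypothetical input.

```python
import ipaddress

# Hypothetical address plan (TEST-NET-2 documentation range); use your own prefixes.
ASSIGNED_PREFIXES = [ipaddress.ip_network("198.51.100.0/24")]

# Well-known UDP service ports abused for reflection:
# DNS 53, NTP 123, CLDAP 389, SSDP 1900.
REFLECTION_PORTS = {53, 123, 389, 1900}


def source_is_legitimate(src_ip: str) -> bool:
    """BCP 38-style check at the network edge: forward only sources from assigned prefixes."""
    addr = ipaddress.ip_address(src_ip)
    return any(addr in net for net in ASSIGNED_PREFIXES)


def inbound_udp_allowed(src_port: int, dst_ip: str, hosts_that_query: set) -> bool:
    """Drop inbound UDP from reflection ports unless the destination itself sends such queries."""
    if src_port in REFLECTION_PORTS:
        return dst_ip in hosts_that_query
    return True


print(source_is_legitimate("198.51.100.23"))                        # True
print(inbound_udp_allowed(123, "198.51.100.10", {"198.51.100.53"}))  # False
```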

Set strict controls on publicly exposed services. For example, avoid exposing full GraphQL schemas or admin endpoints without IP allowlisting or API tokens. If application backends must remain open, enforce reverse-path filtering and protocol verification to reject malformed payloads.

IAM Controls and Bot Defense

Ensure bots and service accounts cannot abuse APIs without verification. Require strong identity assertions from automated users, such as signed tokens or mTLS. IAM misconfiguration can let bot frameworks flood privileged APIs from inside trusted networks.

Implement CAPTCHA or behavioral challenge systems on forms, authentication flows, and support portals. Rotate endpoint paths and throttle unauthenticated users more aggressively. Use device fingerprinting and request integrity tokens to invalidate repeated sessionless requests.

What Doesn't Work

Overreliance on volumetric thresholds alone is ineffective. Many application-layer DoS attacks stay under traditional detection limits. Similarly, relying solely on autoscaling to absorb high request volume inflates costs without addressing the root cause.

WAFs alone won’t stop Layer 7 DoS unless paired with real traffic intelligence. Blocklists and static rules miss randomized payloads and protocol abuse. Without behavior modeling, they quickly fall out of sync with attacker techniques.

Training and incident playbooks often neglect DoS scenarios, treating them as availability issues rather than coordinated threats. Teams must be prepared to triage DoS events as active intrusions, not just service outages.

Successful mitigation combines distributed enforcement, protocol awareness, identity validation, and tight coupling between infrastructure and security telemetry. Without that integration, defenses remain reactive and fragmented.

Response and Recovery from Denial-of-Service Attacks

A well-coordinated response to a denial-of-service attack must begin before the first packet hits the perimeter. Real-time containment and long-term resilience depend on preparation, precision, and cross-functional clarity under pressure.

Immediate Containment Actions

The first task is to isolate the source and reduce impact. If the attack targets a specific IP or service, reroute traffic through a DDoS mitigation provider or activate upstream scrubbing services. Dynamic DNS adjustments can redirect users to backup endpoints, decoupling production APIs or portals from the overloaded infrastructure.

Rate limits, geo-blocking, and ACL adjustments should be applied quickly to filter malicious traffic at the edge. If application-layer attacks are in play, disable or throttle impacted routes. Use emergency caching rules to serve static content and offload dynamic computation where possible.

If the service is customer-facing, activate predefined communication protocols immediately. Provide transparent updates on status pages, incident portals, or public feeds to reduce inbound noise and preserve customer trust.

Key Response Teams and Tools

An effective DoS response involves multiple units. The network team should work with upstream providers and DDoS mitigation vendors to enforce blocking and redirection. The application team must trace impacted endpoints and isolate resource-intensive calls or plugins. Security operations must correlate with SIEM data to detect any concurrent threat activity.

Include DevOps in failover and rollback decisions. Legal and communications teams must be looped in early if customer access, SLAs, or regulatory exposure is at risk. If extortion is involved, engage executive and legal counsel immediately and consider law enforcement notification through channels such as IC3 or CISA.

SIEM and XDR platforms should be used to detect deviation from baseline behavior and assess whether the DoS is a diversion for deeper compromise.

Recovery and Post-Mortem

Once stability is restored, conduct a detailed post-mortem to identify failure points across infrastructure, application logic, and communications. Review logs for anomalies during the attack window. Determine whether telemetry, visibility, or alerting degraded under pressure.

Update rate limits, WAF rules, timeout policies, and scaling configurations based on observed behavior. Evaluate whether logging infrastructure or incident workflows themselves contributed to downtime. If the attack revealed any IAM weaknesses or exposed admin surfaces, prioritize remediation and secret rotation.

Debrief all involved teams and update the runbook. Include scenarios for application-layer DoS, protocol-level floods, and cloud-specific saturation patterns. Incorporate lessons into tabletop exercises and automate known-good responses into the CI/CD pipeline wherever feasible.

Denial-of-service events test not just technical defenses, but organizational reflexes. The most resilient teams treat them as rehearsals for more complex failures — and build muscle memory to move from disruption to containment without delay.

Operationalizing Denial-of-Service Defense

The most effective denial-of-service protections emerge from operational discipline across teams, systems, and suppliers. Organizations that treat DoS as an engineering and coordination problem — not just a network anomaly — are better positioned to absorb, adapt, and recover with minimal impact.

Map Critical Services and Failure Domains

Not all services need the same level of protection. Begin by mapping your public- and partner-facing interfaces, APIs, and integrations that are essential to core business functions. For each, define acceptable downtime, recovery point objectives, and dependencies across DNS, identity, cloud infrastructure, and telemetry.

Use this analysis to identify choke points — places where a single DoS vector could cascade into broader outages or trigger expensive autoscaling events. Document which controls are in place, which are reactive, and which remain assumptions.

Embed DoS Scenarios in Incident Playbooks

DoS scenarios are rarely included in traditional incident response runbooks. Most workflows emphasize data compromise or malware containment. Add specific tracks for volumetric and application-layer events, including DNS rerouting, traffic diversion, and customer communication triggers.

Simulate real-world events using chaos testing or red team drills. Test response speed not only across security but also legal, public relations, infrastructure, and support. Effective DoS mitigation depends as much on decisive internal routing as on packet filtering.

Coordinate with External Providers

Work with upstream ISPs, CDN providers, and DDoS mitigation partners before the emergency. Ensure your teams know which contacts to engage, what thresholds trigger intervention, and what contractual support (e.g., bandwidth caps, rate exemptions) is available under sustained cyber attack.

For cloud environments, verify that mitigation tools are tuned to your architecture. Services like AWS Shield Advanced or Cloudflare Magic Transit require configuration alignment to be effective.

Measure What Matters

Track leading indicators of resilience:

- Percentage of public endpoints behind traffic scrubbing or rate enforcement

- Time to reroute or block a targeted endpoint

- Error budgets consumed during peak attacks

- False-positive impact on legitimate user traffic

These metrics shift DoS protection from reactive patchwork to operational posture.
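
Once an availability SLO is defined, error-budget consumption is simple arithmetic. In this Python sketch the 99.9% target and the request counts are hypothetical. A result above 1.0 means the attack alone exceeded the window's budget.

```python
def error_budget_consumed(slo_target: float, total_requests: int, failed_requests: int) -> float:
    """Fraction of a window's error budget consumed under a request-based availability SLO.

    slo_target is the promised success ratio, e.g. 0.999 for 99.9%.
    The budget is the number of failures that target tolerates in the window.
    """
    allowed_failures = (1.0 - slo_target) * total_requests
    if allowed_failures <= 0:
        raise ValueError("no error budget: SLO of 100% or zero requests")
    return failed_requests / allowed_failures


# Hypothetical attack window: 10 million requests, 25,000 failed.
print(round(error_budget_consumed(0.999, 10_000_000, 25_000), 2))  # 2.5
```

With 10,000,000 requests against a 99.9% SLO, the budget is about 10,000 failures. The 25,000 failures therefore consumed roughly 2.5 times the budget.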

Revisit Assumptions After Each Event

Every denial of service is an opportunity to discover misaligned thresholds, brittle dependencies, or missed telemetry. Feed each event’s data into architectural reviews. Treat every failed autoscaling, exhausted thread pool, or overloaded load balancer as a signal.

Sustained resilience doesn’t mean you never get hit. It means you’re capable of absorbing attacks without turning a temporary disruption into a systemic outage. But resilience requires coordinated, practiced, and continuously hardened operations.