What Is AI Security?

AI security encompasses the practices, technologies, and policies designed to protect artificial intelligence systems from unauthorized access, tampering, and malicious attacks. As a multidisciplinary field, AI security requires collaboration among experts in machine learning, cybersecurity, software engineering, ethics, and various application domains. Organizations must implement AI security to mitigate risks that could result in exploitation and data breaches.

AI Security Explained

AI security, or artificial intelligence security, is the protection of AI systems from cyberattacks. With AI systems increasingly used across all sectors of industry — including high-risk industries such as healthcare, finance, and transportation — AI models and systems have become targets for cyberattackers.

A rapidly evolving field, AI security focuses on identifying, assessing, and mitigating potential risks and vulnerabilities associated with AI systems. Ensuring the security and safety of these systems is paramount to preventing undesirable outcomes and malicious misuse, particularly as AI technologies become more advanced and widely adopted.

AI Systems & Security Risks

AI systems are composed of algorithms and vast datasets, and each component introduces its own vulnerabilities. Key components of AI systems include:

- Data Layer: The data layer refers to the datasets used for training and testing the AI. This data can come from various sources and is often preprocessed and cleaned before use.

- Algorithm Layer: Machine learning algorithms are applied to learn from the data on this layer. Techniques involved include regression, classification, clustering, or neural networks in the case of deep learning.

- Computation Layer: This layer refers to the hardware and software infrastructure that runs the AI algorithms, often requiring high-performance processors or GPUs for complex computations.

- Application Layer: This is the layer where the outputs of the AI system are consumed, and the only one most people think of.

Threats to AI systems can be divided into two categories — adversarial threats and operational failures.

Adversarial Threats

Adversarial threats are deliberate attempts to manipulate AI systems, either by corrupting what the model learns or by feeding it malicious inputs designed to deceive it into making incorrect predictions or classifications. Data poisoning, for example, involves adversaries injecting malicious data into the AI's training set, causing the model to learn incorrect behaviors. Adversarial attacks (also called evasion attacks) skew input data at inference time to manipulate the AI's output, often bypassing security measures without detection.
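To make the evasion attack concrete, the sketch below applies the Fast Gradient Sign Method (FGSM), a well-known adversarial technique, to a tiny logistic regression classifier. The weights, the sample, and the framing of class 1 as "malicious" are hypothetical. Real attacks target far larger models, but the mechanism is the same: shift each input feature by a small amount `eps` in whichever direction increases the model's loss.

```python
import math
from typing import List


def predict_proba(w: List[float], b: float, x: List[float]) -> float:
    """Probability of class 1 under a logistic regression model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def fgsm(w: List[float], b: float, x: List[float], y: int, eps: float) -> List[float]:
    """Fast Gradient Sign Method: move each feature by eps in the direction
    that increases the cross-entropy loss for the true label y."""
    p = predict_proba(w, b, x)
    # For logistic regression, d(loss)/d(x_i) = (p - y) * w_i.
    grad = [(p - y) * wi for wi in w]
    # Step by the sign of the gradient only, so no feature changes by more than eps.
    return [xi + eps * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]


# Hypothetical detector: class 1 = malicious.
w, b = [2.0, -1.0, 0.5], -0.5
x = [0.6, 0.2, 0.4]  # a sample the model correctly scores as malicious
x_adv = fgsm(w, b, x, y=1, eps=0.3)

print(f"original: {predict_proba(w, b, x):.3f}, adversarial: {predict_proba(w, b, x_adv):.3f}")
```

With these values the perturbed sample falls below the 0.5 decision threshold, so the detector would score it as benign even though no feature moved by more than 0.3.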

Model theft poses another serious risk. With model theft, attackers reverse-engineer the AI's model, often by querying it repeatedly and training a copy on its responses, which can expose proprietary logic and sensitive information. Privacy breaches involve exploiting the AI's data processing capabilities to unlawfully access or reveal private information.
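The following sketch shows why unrestricted query access enables model theft. It assumes, purely for illustration, that the deployed model is a linear scorer; in that case an attacker can recover every secret weight exactly with one query per feature plus one more. The `make_victim_api` function and its weights are hypothetical. Extracting nonlinear models takes many more queries and typically involves training a surrogate model on the responses, but the principle is the same.

```python
from typing import Callable, List, Tuple


def make_victim_api() -> Callable[[List[float]], float]:
    """Hypothetical deployed model: a linear scorer whose weights the attacker cannot see."""
    secret_w, secret_b = [0.8, -1.5, 2.25], 0.1

    def query(x: List[float]) -> float:
        return sum(w * xi for w, xi in zip(secret_w, x)) + secret_b

    return query


def extract_linear_model(query: Callable[[List[float]], float],
                         n_features: int) -> Tuple[List[float], float]:
    """Recover the weights and bias of a linear model using n_features + 1 queries."""
    bias = query([0.0] * n_features)        # f(0) = b
    weights = []
    for i in range(n_features):
        unit = [0.0] * n_features
        unit[i] = 1.0
        weights.append(query(unit) - bias)  # f(e_i) - f(0) = w_i
    return weights, bias


api = make_victim_api()
stolen_w, stolen_b = extract_linear_model(api, n_features=3)
print([round(w, 6) for w in stolen_w], round(stolen_b, 6))
```

Because each query leaks information, rate limiting and monitoring of model endpoints are common defenses against this class of attack.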

Operational Threats

Operational failures occur due to limitations or vulnerabilities in the AI system's design, implementation, or deployment. They stem from issues inherent to the AI system, such as:

- Security Vulnerabilities: Weaknesses in the system's security architecture that can be exploited by attackers to gain unauthorized access or disrupt operations.

- Bias and Fairness Issues: AI systems may exhibit biased behavior if trained on biased data, leading to unfair or discriminatory outcomes.

- Robustness and Reliability: AI systems may fail to perform reliably under different conditions or when exposed to unexpected inputs.
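Bias can be measured as well as described. A minimal sketch, assuming binary predictions and a single protected attribute, computes the demographic parity difference: the gap in positive-prediction rates between groups. The loan-approval data is hypothetical, and demographic parity is only one of several fairness definitions; which one applies depends on the use case.

```python
from collections import defaultdict
from typing import Dict, List


def positive_rates(predictions: List[int], groups: List[str]) -> Dict[str, float]:
    """Share of positive (1) predictions within each group."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions: List[int], groups: List[str]) -> float:
    """Largest gap in positive-prediction rate between any two groups (0 = parity)."""
    rates = positive_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical loan-approval decisions (1 = approved) for applicants in groups A and B.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
gap = demographic_parity_difference(preds, groups)
print(positive_rates(preds, groups), gap)
```

A gap of 0.5, as here, means group A is approved three times as often as group B, a signal worth investigating before deployment.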

AI security aims to mitigate both adversarial and operational risks. As with any application or cloud ecosystem, protection involves code-to-cloud security. With AI systems, it also involves securing the AI's training data, its learning process, and its output data.

By necessity, AI security must be proactive and evolve alongside AI technology. It requires a thorough understanding of the AI system, its data, and its vulnerabilities. Strategies include rigorous data validation, ensuring data integrity, implementing intrusion detection systems, and regularly auditing the AI's actions and decisions.

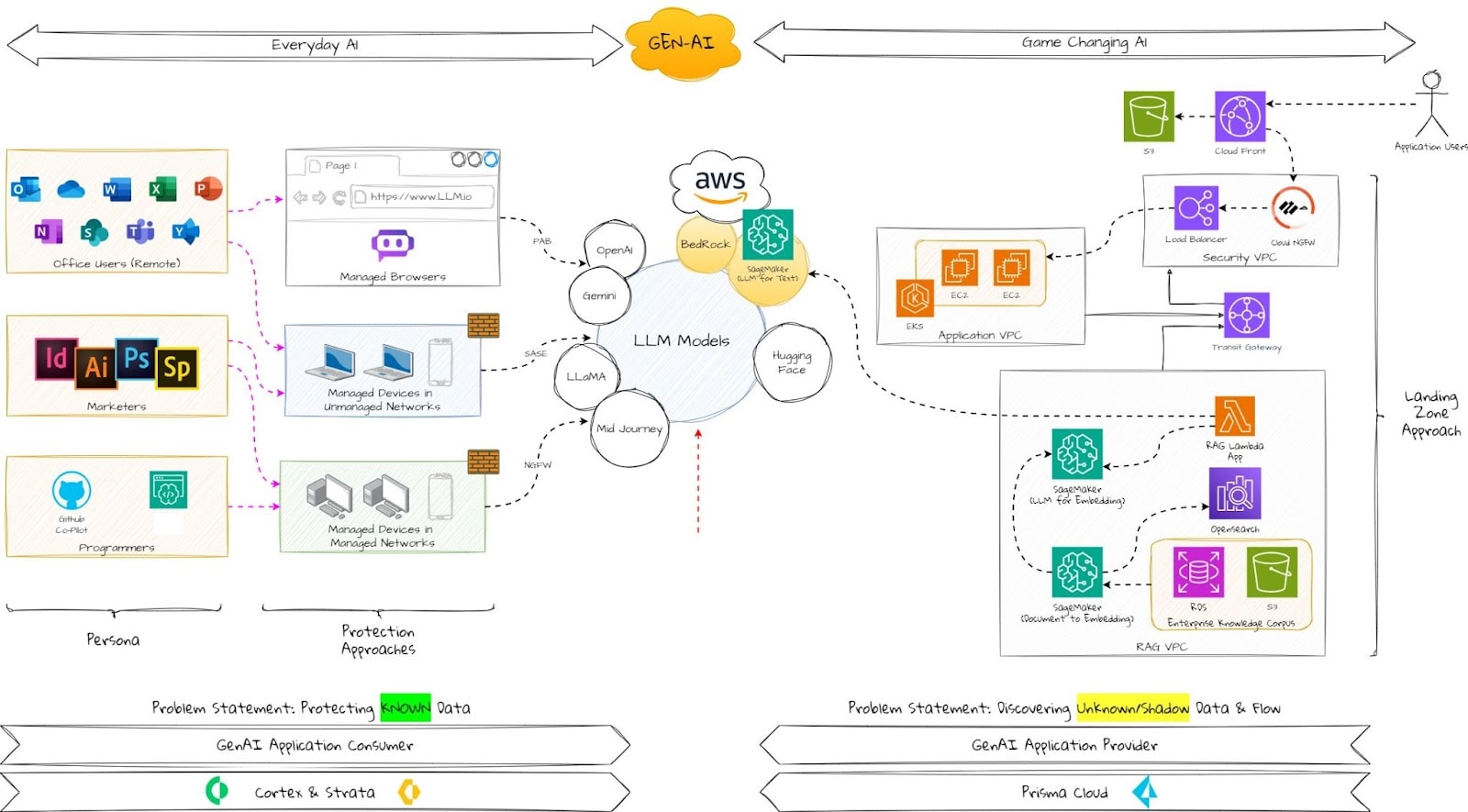

Figure 1: A nonintrusive and effective way to keep your data visible and secure.

Importance of AI Security

Handling large volumes of data and running compute-intensive AI workloads has organizations turning to cloud services, increasingly in multicloud and hybrid cloud deployments. Virtual machines, container orchestration platforms like Kubernetes, and extensive cloud datastores form the backbone of large language models (LLMs), retrieval-augmented generation (RAG), and AI agents. Cloud-powered AI enables organizations to:

- Train AI models.

- Implement ChatGPT-style generative AI capabilities.

- Analyze massive datasets.

- Run large-scale AI deployments.

Organizations rely on multiple cloud service providers to develop, deploy, and consume AI products. Virtual machines, containers, and APIs are essential to AI innovation. To manage workloads and compute resources and to isolate sensitive assets, organizations need dedicated cloud environments for testing, staging, and production.

But while multicloud infrastructures may be necessary for AI systems, they make those systems more complex to secure. The growing attack surface created by multicloud deployments introduces visibility gaps, policy inconsistencies, and disparate access controls, all of which create risk and open opportunities for bad actors.

AI Innovation Means Data

Sensitive and proprietary datasets form the DNA of AI workloads. To train LLMs and other AI models, organizations must create massive datastores of their most sensitive information. These datastores often hold terabytes of data, usually hosted on public cloud services, that include:

- Intellectual property

- Business intelligence

- Supplier data

- Personally identifiable information (PII)

- Health information

We’re all familiar with the risks of a data breach. Losing employee or customer information, trade secrets, intellectual property, source code, operational information, business forecasts, and other sensitive data opens an organization to tremendous financial, reputational, and legal risks.

But the consequences of an attack extend beyond the organization. As AI algorithms gain autonomy in decision-making processes, corruption could impact human lives. In addition to exposing sensitive data, compromised AI systems could make flawed decisions or be manipulated to serve malicious purposes. The repercussions of security breaches might involve catastrophic outcomes in domains like healthcare, transportation, and cybersecurity.

Maintaining public trust in the safety and reliability of AI technologies hinges on security measures that safeguard AI models, training data, and runtime environments from threats. Proactive security efforts, rigorous testing, and continuous monitoring go a long way toward mitigating AI security risks and fostering the responsible development and deployment of these powerful technologies.

The Scope of AI Security

The scope of AI security spans multiple dimensions, including the security of the data used by AI, the algorithms, and the infrastructure on which AI systems are deployed.

Data Integrity

Ensuring the accuracy and consistency of data throughout its lifecycle is paramount in AI systems. Any manipulation of data, whether at rest, in transit, or in use, can lead to flawed outcomes from AI decision-making processes.
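One common way to detect tampering with data at rest is to record a cryptographic digest of each dataset file in a manifest and re-verify it before every training run. The sketch below uses SHA-256 from Python's standard `hashlib`; the file names and contents are hypothetical, and in-memory bytes stand in for files. In practice the manifest itself must be stored and signed separately, or an attacker who can modify the data can simply regenerate it.

```python
import hashlib
from typing import Dict, List


def build_manifest(files: Dict[str, bytes]) -> Dict[str, str]:
    """Map each dataset file name to the SHA-256 digest of its contents."""
    return {name: hashlib.sha256(data).hexdigest() for name, data in files.items()}


def find_tampered(files: Dict[str, bytes], manifest: Dict[str, str]) -> List[str]:
    """Return files that are new, modified, or missing relative to the manifest."""
    current = build_manifest(files)
    changed = [name for name, digest in current.items() if manifest.get(name) != digest]
    missing = [name for name in manifest if name not in current]
    return sorted(changed + missing)


# Hypothetical dataset files, held in memory for the example.
dataset = {
    "train.csv": b"id,label\n1,benign\n2,malicious\n",
    "test.csv": b"id,label\n3,benign\n",
}
manifest = build_manifest(dataset)  # store separately, e.g. signed and write-protected

dataset["train.csv"] = b"id,label\n1,benign\n2,benign\n"  # simulated label flip (poisoning)
print(find_tampered(dataset, manifest))  # ['train.csv']
```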

Data Security

AI systems rely heavily on large datasets for training and operation. Ensuring the security of this data is paramount because it often includes sensitive or personal information. Data security measures include encryption, access control, and secure data storage. Proper anonymization of datasets is also critical to prevent de-anonymization attacks that could lead to privacy breaches.
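Whether a dataset is adequately anonymized can be checked before it is used. The sketch below computes k-anonymity: every combination of quasi-identifiers (attributes such as ZIP code and age band that are not names but can still single someone out) should be shared by at least k records. The records and column names are hypothetical. k-anonymity is only one measure; it does not, for example, prevent attribute disclosure when every record in a group has the same sensitive value.

```python
from collections import Counter
from typing import Dict, List, Sequence


def k_anonymity(rows: List[Dict[str, str]], quasi_identifiers: Sequence[str]) -> int:
    """Size of the smallest group of rows sharing the same quasi-identifier values.
    A dataset is k-anonymous if this number is at least k."""
    groups = Counter(tuple(row[q] for q in quasi_identifiers) for row in rows)
    return min(groups.values())


# Hypothetical, already-generalized patient records.
records = [
    {"zip": "021**", "age_band": "30-39", "diagnosis": "flu"},
    {"zip": "021**", "age_band": "30-39", "diagnosis": "asthma"},
    {"zip": "021**", "age_band": "40-49", "diagnosis": "flu"},
    {"zip": "021**", "age_band": "40-49", "diagnosis": "diabetes"},
    {"zip": "946**", "age_band": "20-29", "diagnosis": "flu"},
]
print(k_anonymity(records, ["zip", "age_band"]))
```

Here the last record is unique on ZIP prefix and age band, so k = 1 and that patient could potentially be re-identified; dropping or further generalizing it raises k to 2.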

Algorithmic Reliability

AI systems must perform reliably and predictably under a variety of conditions. Security measures must be in place to prevent scenarios where AI algorithms could be intentionally misled via inputs designed to exploit vulnerabilities in their logic. Reliability measures seek to fortify the logic of AI systems against attempts to exploit weaknesses, which could lead to erroneous outcomes or system failures.

User Privacy

Protecting the information of users interacting with AI systems is a critical concern, particularly in applications that handle sensitive personal data such as personally identifiable information (PII). Security practices must prevent unauthorized data access and ensure compliance with privacy laws and standards.

Operational Continuity

AI systems, particularly those in critical infrastructure, must be resilient to attacks that could disrupt operational functionality. This includes safeguarding against both external attacks and internal failures.

Addressing these domains requires a comprehensive approach that includes encryption, secure data storage and transfer, regular auditing of AI algorithms, and stringent access controls. For cloud security teams, integrating AI security into existing frameworks presents both a challenge and an opportunity to redefine what secure system operations mean in the era of intelligent technology.

Model Security

AI models can be vulnerable to attacks, such as adversarial attacks, model extraction, and model inversion attacks. These attacks aim to manipulate the model's behavior, steal the model's intellectual property, or reveal sensitive information from the training data.

Infrastructure Security

AI systems are often deployed on cloud platforms or on-premises servers, each with specific security considerations. This encompasses the physical security of servers, network security to prevent unauthorized data access or denial-of-service attacks, and application security to protect against vulnerabilities in the software that might be exploited.

Ethical Considerations and Governance

AI security isn't just about technological measures. It also involves the establishment of ethical guidelines and governance frameworks to ensure AI is used responsibly. This includes addressing issues such as bias in AI decision-making, the transparency of AI systems (often referred to as explainability), and the development of AI in a way that respects privacy and human rights. Developing applications with security in mind promotes responsible AI usage, fostering trust among users and stakeholders by ensuring that ethical standards are upheld.

Compliance and Standards

Many industries and jurisdictions have regulations that govern how AI can be designed and used. The EU's General Data Protection Regulation (GDPR), for instance, imposes complex requirements around data usage and consumer rights, particularly with regard to automated decision-making and data protection. When it comes to securing AI and ML systems, effective AI security should go beyond the minimum these guidelines and regulations require.

AI Safety

AI safety aims to ensure that AI systems behave as intended, even in unexpected or edge cases, and don’t cause unintended harm. Techniques include safe exploration, reward modeling, and value alignment.

AI Security Challenges

For many organizations, the pace of AI innovation is outstripping their ability to confidently secure the AI projects expected to drive their growth. Attackers can exfiltrate and sell proprietary data or leverage it for ransomware. Bad actors and malicious insiders can inject tainted and malicious data into datastores, compromising AI outputs. AI security is both challenging and high stakes.

AI-Generated Code

The growing use of AI-generated code to accelerate software development underscores the importance of AI security. While this use case offers myriad benefits, it also introduces security risks and challenges.

AI models learn to generate code by training on existing software code. If this training data contains insecure or vulnerable code, the AI model can learn and propagate these vulnerabilities in the code it produces. Without proper security controls, AI-generated code can significantly expand the attack surface with vulnerabilities that malicious actors can exploit.

Similarly, if the algorithms and techniques used to build and train an AI model contain security flaws, the AI model produced will likely be insecure. Here, too, attackers will find opportunities to manipulate the model's behavior or compromise the integrity of its outputs.

To avoid accelerating risks in production, organizations must secure both the AI-generated code and the training data the model learns from, keeping that dataset comprehensive and continuously updated. Both require rigorous validation against security standards.
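Part of that validation can be automated. The sketch below parses Python code with the standard `ast` module and flags a few patterns often associated with injection and deserialization risks: calls to `eval`, `exec`, `os.system`, or `pickle.loads`, and any call passing `shell=True`. The deny-list is a hypothetical example, not a complete rule set; real pipelines would run a full static application security testing (SAST) tool alongside human review.

```python
import ast
from typing import List, Tuple

# Hypothetical deny-list for illustration only.
RISKY_CALLS = {"eval", "exec", "os.system", "pickle.loads"}


def _call_name(node: ast.Call) -> str:
    """Render a call target such as `eval` or `os.system` as a dotted name."""
    func = node.func
    parts = []
    while isinstance(func, ast.Attribute):
        parts.append(func.attr)
        func = func.value
    if isinstance(func, ast.Name):
        parts.append(func.id)
    return ".".join(reversed(parts))


def scan_generated_code(source: str) -> List[Tuple[int, str]]:
    """Return sorted (line number, finding) pairs for risky calls in Python source."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Call):
            continue
        name = _call_name(node)
        if name in RISKY_CALLS:
            findings.append((node.lineno, f"call to {name}"))
        for kw in node.keywords:
            if kw.arg == "shell" and isinstance(kw.value, ast.Constant) and kw.value.value is True:
                findings.append((node.lineno, f"{name} with shell=True"))
    return sorted(findings)


# A hypothetical snippet returned by a code assistant.
snippet = '''
import subprocess
def run(cmd):
    subprocess.run(cmd, shell=True)
    return eval(cmd)
'''
print(scan_generated_code(snippet))
```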

System Reliability

The reliability challenge stems from the need for AI systems to operate effectively across variable and unpredictable conditions. AI-powered applications must maintain performance when confronted with diverse inputs that may not have been present in the training data. Data drift, in which the data's statistical properties change over time, heightens the problem by causing performance degradation or unexpected behavior that adversaries can exploit.
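Drift can be detected by comparing the distribution of a feature in production against the distribution seen during training. A minimal sketch, using the two-sample Kolmogorov-Smirnov (KS) statistic implemented in plain Python, follows. The feature (request payload size), the sample values, and the 0.2 alert threshold are hypothetical; SciPy provides `scipy.stats.ks_2samp`, which also returns a p-value.

```python
from bisect import bisect_right
from typing import Sequence


def ks_statistic(reference: Sequence[float], current: Sequence[float]) -> float:
    """Two-sample Kolmogorov-Smirnov statistic: the largest vertical gap between
    the empirical CDFs of the two samples (0 = identical, 1 = no overlap)."""
    ref, cur = sorted(reference), sorted(current)
    gap = 0.0
    # The gap can only change at observed values, so checking those is sufficient.
    for value in ref + cur:
        cdf_ref = bisect_right(ref, value) / len(ref)
        cdf_cur = bisect_right(cur, value) / len(cur)
        gap = max(gap, abs(cdf_ref - cdf_cur))
    return gap


def has_drifted(reference: Sequence[float], current: Sequence[float],
                threshold: float = 0.2) -> bool:
    """Flag drift when the KS statistic exceeds a (hypothetical) alert threshold."""
    return ks_statistic(reference, current) > threshold


training_sizes = [120, 130, 125, 140, 135, 128, 132, 138]  # payload sizes at training time
live_sizes = [210, 220, 205, 230, 215, 225, 212, 218]      # payload sizes in production
print(ks_statistic(training_sizes, live_sizes), has_drifted(training_sizes, live_sizes))
```

A drift alert like this does not say whether the shift is benign or adversarial; it tells the team where to look.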

Securing AI systems against such evolving conditions requires continuous monitoring and adaptation. The challenge lies in creating models that not only perform well on known data but also generalize effectively to new, unseen scenarios. This is tricky because the reliability of AI systems is tested by adversarial attacks, where slight, often imperceptible, alterations to inputs are designed to deceive the system into making errors. The resulting vulnerabilities must be identified and mitigated to prevent malicious exploitation.

To address these challenges, AI systems are subjected to rigorous validation against a range of test cases and conditions, often augmented by adversarial training techniques. Security teams must implement regular updates and patches in response to newly discovered vulnerabilities and adapt the AI models to maintain consistency and reliability. The goal is to build resilience within AI systems, enabling them to resist attacks and function reliably, regardless of changes in their operational environment.

Complexity of AI Algorithms

The complexity of AI algorithms stems from their layered architectures and the vast amounts of data they process, leading to intricate decision-making pathways that aren’t easily interpreted by humans. This lack of interpretability makes identifying vulnerabilities within them daunting.

Complex AI models often operate as "black boxes," with their internal workings obscured even from their developers. Without clear insight into every decision path or data processing mechanism, pinpointing the location and nature of a security vulnerability can be like finding a needle in a haystack. This opacity makes it challenging to conduct thorough security audits or to ensure that the system will behave securely under all conditions, especially when confronted with novel or sophisticated attacks.

The adaptive nature of AI algorithms, designed to learn and evolve over time, ups the ante because they can introduce new vulnerabilities as they interact with changing data and environments. Security measures must not only address known weaknesses but also anticipate vulnerabilities the model may acquire through future learning. This dynamic nature requires a dynamic approach to security, where measures are continuously updated and tested against the evolving behaviors of the AI systems they protect.

Insufficient Testing Environments

AI systems require comprehensive testing against an array of attack scenarios to ensure resilience, but creating environments that faithfully mimic real-world conditions is difficult. Testing environments need to evolve to accommodate the latest attack vectors and the unpredictable nature of adversarial tactics. Deficiencies in this area can lead to AI systems being deployed with untested vulnerabilities.

Ensuring robustness against such threats demands dynamic and sophisticated testing environments that can adapt to the latest threat intelligence and provide thorough validation of an AI system's security measures.

Lack of Standardization

Without universally accepted frameworks or guidelines, organizations and developers face uncertainty in implementing security protocols for AI systems. The lack of consensus can lead to fragmented approaches, where each entity adopts disparate security measures. Variability complicates collaboration, interoperability, and the assessment of AI systems' security, making it challenging to ensure a consistent security posture across the industry.

Interoperability Issues

The diversity of AI models, data formats, and platforms can lead to incompatibilities, creating friction in integrating and orchestrating these systems, as well as expanding the attack surface. Consider that each component's unique vulnerabilities may be compounded when linked, offering multiple vectors for exploitation.

Interoperability challenges escalate in environments that span diverse infrastructures, such as hybrid clouds, where AI systems must operate seamlessly. Ensuring secure data exchange and consistent functionality across heterogeneous landscapes requires robust technologies, such as data security posture management (DSPM), along with standardized interfaces and protocols. Without them, security gaps may emerge, leaving systems susceptible to attack.

Scalability of Security Measures

The scalability of security measures becomes a formidable challenge as AI systems expand. As AI infrastructures grow in complexity and volume, ensuring that security protocols keep pace is not simply a matter of increasing resources in a linear fashion. Security mechanisms must evolve to handle the greater quantity of data and interactions — as well as the increased sophistication of potential threats that come with larger attack surfaces.

Data, of course, requires intricate access controls, constant monitoring, and advanced threat detection capabilities. Traditional security solutions designed for smaller, less complex systems may become inadequate, as they lack the agility to adapt to the high-velocity, high-volume demands of large-scale AI operations. What’s more, the integration of new nodes or services into the AI ecosystem can introduce unforeseen vulnerabilities that require assessment and continuous adjustments to security measures.

AI Security Solutions

The sophistication and scale of AI-powered attacks can overwhelm many current tools. What’s needed is automated intelligence that can continuously analyze the cloud stack, precisely connect insights from across the environment, and rapidly identify the greatest risks to the organization.

Intelligent & Integrated CNAPP

A comprehensive cloud-native application protection platform (CNAPP) with ML-powered threat detection capabilities, such as user and entity behavior analytics (UEBA) and network anomaly detection, along with AI risk modeling and blast radius analysis, provides an essential foundation for AI security. With AI-driven analytics, security teams can see the impact of a compromise along with recommendations for the most efficient remediation workflow.
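UEBA works by learning a baseline of normal activity for each user or service and flagging deviations from it. Commercial tools use far richer models, but a per-entity z-score illustrates the idea. The entity names, the daily request counts against a training-data store, and the threshold of three standard deviations are all hypothetical.

```python
from statistics import mean, stdev
from typing import Dict, List


def flag_anomalies(history: Dict[str, List[int]], today: Dict[str, int],
                   z_threshold: float = 3.0) -> List[str]:
    """Return entities whose activity today sits more than z_threshold standard
    deviations above their own historical mean."""
    flagged = []
    for entity, counts in history.items():
        mu, sigma = mean(counts), stdev(counts)  # stdev needs at least two samples
        observed = today.get(entity, 0)
        if sigma == 0:
            # Perfectly constant history: treat any increase as anomalous.
            is_anomalous = observed > mu
        else:
            is_anomalous = (observed - mu) / sigma > z_threshold
        if is_anomalous:
            flagged.append(entity)
    return flagged


# Hypothetical daily reads of a training-data bucket per identity.
history = {
    "svc-training-pipeline": [100, 110, 95, 105, 102, 98, 108],
    "alice": [20, 22, 19, 25, 21, 23, 20],
}
today = {"svc-training-pipeline": 104, "alice": 400}
print(flag_anomalies(history, today))  # ['alice']
```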

Integrating AI-SPM within the CNAPP enhances the platform's capabilities, enabling it to address the unique challenges of deploying AI and GenAI at scale while helping reduce security and compliance risks.

AI Security Posture Management (AI-SPM)

AI Security Posture Management (AI-SPM) provides visibility into the AI model lifecycle, from data ingestion and training to deployment. By analyzing model behavior, data flows, and system interactions, AI-SPM helps identify potential security and compliance risks that may not be apparent through traditional risk analysis and detection tools. Organizations can use these insights to enforce policies and best practices, ensuring that AI systems are deployed in a secure and compliant manner. AI-SPM goes beyond traditional cloud security measures to focus on the vulnerabilities and risks associated with AI models, data, and infrastructure. The overview that follows explains how AI-SPM works.

Visibility and Discovery

AI-SPM begins with creating a complete inventory of AI assets across an organization's environment. This includes identifying all AI models, applications, and associated cloud resources. The goal is to prevent shadow AI — AI systems deployed without proper oversight — which can lead to security vulnerabilities and compliance issues.
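At its core, shadow AI detection is a reconciliation between what is actually running and what has been approved. The sketch assumes a list of discovered AI assets (in practice gathered from cloud provider APIs, which this hypothetical example does not call) and an approved inventory, and reports anything running without sign-off. The asset IDs and fields are illustrative.

```python
from typing import Dict, List, Set


def find_shadow_ai(discovered: List[Dict[str, str]], approved_ids: Set[str]) -> List[Dict[str, str]]:
    """Return discovered AI assets that do not appear in the approved inventory."""
    return [asset for asset in discovered if asset["id"] not in approved_ids]


# Hypothetical discovery results.
discovered = [
    {"id": "ep-fraud-v3", "kind": "model-endpoint", "owner": "risk-team"},
    {"id": "ep-chat-test", "kind": "model-endpoint", "owner": "unknown"},
    {"id": "nb-llm-finetune", "kind": "notebook", "owner": "marketing"},
]
approved_ids = {"ep-fraud-v3"}

for asset in find_shadow_ai(discovered, approved_ids):
    print(f"unapproved {asset['kind']}: {asset['id']} (owner: {asset['owner']})")
```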

Data Governance

AI systems rely heavily on data for training and operation. AI-SPM involves inspecting and classifying the data sources used for training and grounding AI models to identify sensitive or regulated data, such as PII, that might be exposed through model outputs or interactions.
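A simplified version of that classification step can be sketched with regular expressions. The patterns below (email addresses, US Social Security numbers, and card-like digit sequences) are illustrative assumptions that will miss many formats and produce false positives; production data classifiers combine validated detectors, checksums, and context. The same check could also be run against model outputs.

```python
import re
from typing import Dict, List

# Illustrative patterns only; they are neither exhaustive nor precise.
PII_PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "payment_card": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}


def classify_text(text: str) -> List[str]:
    """Return the sorted PII categories whose pattern matches anywhere in text."""
    return sorted(label for label, pattern in PII_PATTERNS.items() if pattern.search(text))


def classify_source(records: List[str]) -> Dict[str, int]:
    """Count how many records in a training or grounding source contain each category."""
    counts: Dict[str, int] = {}
    for record in records:
        for label in classify_text(record):
            counts[label] = counts.get(label, 0) + 1
    return counts


# Hypothetical support tickets proposed as fine-tuning data.
support_tickets = [
    "Customer jane.doe@example.com reports a login issue",
    "Caller gave SSN 123-45-6789 for verification",
    "Refund issued to card 4111 1111 1111 1111",
    "Model latency improved after the update",
]
print(classify_source(support_tickets))
```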

Risk Management

AI-SPM enables organizations to identify vulnerabilities and misconfigurations in the AI supply chain. This includes analyzing the entire AI ecosystem — from source data and reference data to libraries, APIs, and pipelines powering each model. The goal is to detect improper encryption, logging, authentication, or authorization settings that could lead to data exfiltration or unauthorized access.

Runtime Monitoring and Detection

Continuous monitoring of AI systems in operation is a crucial aspect of AI-SPM. This involves tracking user interactions, prompts, and inputs to AI models (especially large language models) to detect misuse, prompt overloading, unauthorized access attempts, or abnormal activity. It also includes scanning model outputs and logs to identify potential instances of sensitive data exposure. AI-SPM alerts security teams to AI-specific threats such as data poisoning, model theft, and improper output handling, and provides guidance on remediation steps.
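One of the simplest runtime signals is prompt volume per caller. The sketch below keeps a sliding window of timestamps for each API key and flags callers that exceed a limit, a basic indicator of prompt flooding or automated abuse. The class name, limits, and caller ID are hypothetical, and timestamps are passed in explicitly to keep the example deterministic; a live service would read a monotonic clock.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class PromptRateMonitor:
    """Flag callers that send more than max_prompts within window_seconds."""

    def __init__(self, max_prompts: int = 20, window_seconds: float = 60.0):
        self.max_prompts = max_prompts
        self.window = window_seconds
        self.history: Dict[str, Deque[float]] = defaultdict(deque)

    def record(self, caller: str, timestamp: float) -> bool:
        """Record one prompt and return True if the caller is now over the limit."""
        events = self.history[caller]
        events.append(timestamp)
        # Drop prompts that have fallen out of the sliding window.
        while events and timestamp - events[0] >= self.window:
            events.popleft()
        return len(events) > self.max_prompts


monitor = PromptRateMonitor(max_prompts=3, window_seconds=10)
burst = [monitor.record("api-key-42", t) for t in (0, 1, 2, 3)]
later = monitor.record("api-key-42", 20)
print(burst, later)
```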

Compliance and Governance

AI-SPM helps organizations ensure their AI systems comply with legal requirements. This includes implementing strict controls around AI usage and customer data fed into AI applications. And, as regulations around AI evolve, AI-SPM can keep organizations ahead of compliance requirements by embedding privacy and acceptable use considerations into the AI development process.

AI Supply Chain Security

AI-SPM addresses the security of the entire AI supply chain, including third-party components, libraries, and services used in AI development and deployment. This helps prevent vulnerabilities that could be introduced through these external elements.

Threat Detection and Mitigation

AI-SPM incorporates specialized security controls tailored to AI assets. It maintains a knowledge base mapping AI threats to applicable countermeasures, allowing for proactive risk mitigation.

Secure Configuration Management

AI-SPM tools help enforce secure configuration baselines for AI services. This includes built-in rules to detect misconfigured AI services that could lead to security vulnerabilities.
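Baseline rules of this kind can be expressed as data and evaluated against each service's settings. The setting names, required values, and messages below are hypothetical and do not correspond to any particular cloud provider's API; they mirror the encryption, logging, and authentication checks described in the Risk Management section.

```python
from typing import Dict, List, Tuple

# Hypothetical baseline: (setting, required value, finding reported on violation).
BASELINE_RULES: List[Tuple[str, bool, str]] = [
    ("encryption_at_rest", True, "storage for model artifacts is not encrypted"),
    ("logging_enabled", True, "inference requests are not logged"),
    ("public_endpoint", False, "model endpoint is reachable from the internet"),
    ("auth_required", True, "endpoint accepts unauthenticated requests"),
]


def check_ai_service(config: Dict[str, bool]) -> List[str]:
    """Return a finding for every baseline rule the configuration violates.
    Settings missing from the configuration are treated as violations."""
    return [finding for key, required, finding in BASELINE_RULES if config.get(key) != required]


endpoint_config = {
    "encryption_at_rest": True,
    "logging_enabled": False,
    "public_endpoint": True,
    "auth_required": True,
}
for finding in check_ai_service(endpoint_config):
    print("misconfiguration:", finding)
```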

Attack Path Analysis

Advanced AI-SPM solutions offer attack path analysis capabilities. This feature helps identify potential routes that attackers could exploit to compromise AI systems, allowing organizations to proactively address these vulnerabilities.
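Attack path analysis is commonly modeled as a graph problem: nodes are assets and identities, and a directed edge means an attacker who controls one can reach the other. The sketch below uses breadth-first search to find the shortest path from an internet-exposed entry point to a sensitive AI asset. The environment graph is hypothetical; real tools derive edges from network exposure, permissions, and vulnerabilities. In this simplified model, the set of nodes the search can reach from a compromised asset corresponds to that asset's blast radius.

```python
from collections import deque
from typing import Dict, List, Optional


def shortest_attack_path(graph: Dict[str, List[str]], entry: str, target: str) -> Optional[List[str]]:
    """Breadth-first search for the fewest-hop route from an exposed entry point
    to a sensitive asset. Returns None if the asset is unreachable."""
    parents: Dict[str, Optional[str]] = {entry: None}
    queue = deque([entry])
    while queue:
        node = queue.popleft()
        if node == target:
            # Walk parent links back to the entry point, then reverse.
            path: List[str] = []
            current: Optional[str] = node
            while current is not None:
                path.append(current)
                current = parents[current]
            return path[::-1]
        for neighbor in graph.get(node, []):
            if neighbor not in parents:  # visit each node once
                parents[neighbor] = node
                queue.append(neighbor)
    return None


# Hypothetical environment: an edge A -> B means "control of A grants access to B".
environment = {
    "internet": ["public-notebook"],
    "public-notebook": ["ci-service-account"],
    "ci-service-account": ["training-bucket", "model-registry"],
    "model-registry": ["prod-inference-endpoint"],
}
print(shortest_attack_path(environment, "internet", "prod-inference-endpoint"))
```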

Integration with MLSecOps

AI-SPM is a critical component of the broader MLSecOps (machine learning security operations) framework. It supports activities during the operation and monitoring phases of the ML lifecycle, ensuring that security is embedded throughout the development and deployment of AI systems.

Alignment with AI Risk Management Frameworks

AI-SPM aligns with and supports various AI risk management frameworks, such as the NIST AI Risk Management Framework, Google's Secure AI Framework (SAIF), and MITRE's ATLAS. This alignment helps organizations implement comprehensive AI governance strategies.

By addressing these dimensions, AI-SPM provides organizations with a holistic approach to securing their AI assets. It enables them to maintain the integrity, confidentiality, and availability of AI systems while ensuring compliance with regulatory requirements.

AI Security Best Practices

AI security best practices can help organizations protect their AI systems from threats and ensure their reliable and secure operation.

- AI security begins with building secure and reliable AI systems. Design AI systems to be explainable and transparent, so that their decisions can be understood and audited.

- Incorporate security practices throughout the entire AI development lifecycle — from data collection and model training to deployment and monitoring.

- Use secure and reliable data for training AI models, ensuring it represents the problem space and is free from biases or errors that could affect the model's performance.

- Protect the data used for training and testing AI models. Encrypt sensitive data, control access to data, and regularly audit data usage. Implement techniques such as differential privacy to protect the privacy of individuals whose data is used in AI models.

- Build robust security measures into the design and development of AI algorithms to minimize vulnerabilities attackers could exploit.

- Use threat modeling to identify potential threats and vulnerabilities in the AI system and design measures to protect against them.

- Rigorously test AI-generated code for vulnerabilities before deploying it in production environments.

- Regularly test AI models for vulnerabilities before deploying them in production environments. Validate the performance of AI models under different conditions.

- Regularly audit AI systems to detect anomalies or changes in performance that could indicate a security issue.

- Employ human oversight to monitor and evaluate AI decisions for accuracy, fairness, and ethical considerations. Human oversight helps to mitigate risks associated with autonomous decision-making.

- Establish an incident response plan that includes identifying the issue, containing the incident, eradicating the threat, recovering from the incident, and learning from the incident.

- Stay up-to-date with the latest research and developments, and continuously update and adapt security measures as needed.

Integrating AI security into the software development lifecycle (SDLC) ensures that security measures are embedded from the design phase. This includes implementing secure coding practices, conducting vulnerability assessments, and adhering to established security frameworks such as OWASP’s guidelines. A secure SDLC minimizes the risk of introducing security flaws during the development of AI applications.

AI Security FAQs

In the context of AI and machine learning, differential privacy helps to ensure the model doesn't remember specific details about the training data that could leak sensitive information, while still allowing it to learn general patterns in the data.
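The core idea can be shown with the Laplace mechanism, a standard differential privacy technique. This minimal sketch applies it to a simple count query rather than to model training. It assumes each person contributes at most one record, so the count's sensitivity is 1, and it adds noise with scale sensitivity / epsilon. The function names and the sample data are hypothetical. Training-time approaches such as DP-SGD apply the same principle to clipped gradients instead of to query results.

```python
import random
from typing import Callable, Iterable, TypeVar

T = TypeVar("T")


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponentials."""
    # expovariate takes a rate; rate = 1 / scale gives each exponential mean = scale.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(
    records: Iterable[T],
    predicate: Callable[[T], bool],
    epsilon: float,
    rng: random.Random,
) -> float:
    """Release a count with epsilon-differential privacy via the Laplace mechanism."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    # Assumption: each individual contributes at most one record, so adding or
    # removing one person changes the count by at most 1 (sensitivity = 1).
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


# Hypothetical example: how many people in a small dataset are over 65?
ages = [34, 71, 68, 45, 80, 52, 66, 29]
rng = random.Random(0)
print(private_count(ages, lambda age: age > 65, epsilon=0.5, rng=rng))
```

A smaller epsilon adds more noise and gives stronger privacy. Noisy answers remain useful in aggregate, but any single released value reveals little about whether a given person's record is present.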

With visibility, organizations can identify potential risks, misconfigurations, and compliance issues. Control allows organizations to take corrective actions, such as implementing security policies, remediating vulnerabilities, and managing access to AI resources.

In the context of artificial intelligence and machine learning, explainability refers to the ability to understand and interpret the decision-making process of an AI or ML model. It provides insights into how the model derives its predictions, decisions, or classifications.

Explainability is important for several reasons:

- Trust: When users can understand how an AI system makes decisions, they’re more likely to trust its output and integrate it into their workflows.

- Debugging and Improvement: Explainability allows developers to identify potential issues or biases in the AI system and make improvements accordingly.

- Compliance and Regulation: In certain industries, such as finance and healthcare, it’s crucial to be able to explain the rationale behind AI-driven decisions to comply with regulations and ethical standards.

- Fairness and Ethics: Explainable AI helps reveal biased or discriminatory behavior in AI systems, which supports fairness and ethical considerations in AI development.

Various techniques and approaches can achieve explainability in AI systems, such as feature importance ranking, decision trees, and model-agnostic methods like Local Interpretable Model-agnostic Explanations (LIME) and Shapley Additive Explanations (SHAP). These techniques aim to provide human-understandable explanations for complex AI models, such as deep learning and ensemble methods.
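Shapley values are the idea behind SHAP, and they can be computed exactly when a model has only a few features. The sketch below does not use the SHAP library's API. It enumerates every coalition of features, represents "absent" features with a baseline value, and averages each feature's marginal contribution using the Shapley weights. The baseline representation is an assumption; SHAP libraries offer several ways to model absent features. The toy model and inputs are hypothetical.

```python
import math
from itertools import combinations
from typing import Callable, List, Sequence


def shapley_values(
    model: Callable[[Sequence[float]], float],
    x: Sequence[float],
    baseline: Sequence[float],
) -> List[float]:
    """Exact Shapley attributions for one input x (feasible only for small n)."""
    n = len(x)

    def value(coalition: set) -> float:
        # Features in the coalition take their real values; the rest use the baseline.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(len(others) + 1):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions S of this size.
            weight = math.factorial(size) * math.factorial(n - size - 1) / math.factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def toy_model(z: Sequence[float]) -> float:
    # Hypothetical model: linear terms plus an interaction between features 0 and 1.
    return 2 * z[0] + 3 * z[1] - z[2] + z[0] * z[1]


print(shapley_values(toy_model, [1.0, 2.0, 3.0], [0.0, 0.0, 0.0]))
# approximately [3.0, 7.0, -3.0]: the interaction term is split evenly between features 0 and 1
```

Exact enumeration needs on the order of 2^n model evaluations for n features. This is why practical SHAP and LIME implementations rely on sampling or on model-specific shortcuts. The attributions also provide a sanity check: they always sum to model(x) minus model(baseline).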

An algorithm is a set of predefined instructions for solving a specific problem or performing a task. It operates in a fixed, static manner and does not learn from data. In contrast, an AI model is a mathematical construct that learns from training data to make predictions or decisions, and it can be retrained to adapt and improve over time. Algorithms are ideal for well-defined tasks with clear rules, while AI models excel at handling uncertainty and complexity, as in image recognition or natural language processing. In practice the two work together: algorithms process the data, and AI models use that data to learn and make informed predictions.
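The contrast can be illustrated with a toy detection task. The sketch below uses hypothetical data and function names. The rule-based function applies a hand-picked threshold that never changes. The "model" learns its threshold from labeled examples by choosing the cut point that produces the fewest training errors. Real AI models learn far more parameters, but the distinction holds: one encodes fixed instructions, and the other derives its behavior from data.

```python
from typing import List, Tuple


def rule_based_flag(failed_logins: int) -> bool:
    # Algorithm: a fixed, hand-written rule; its behavior never changes.
    return failed_logins > 100


def learn_threshold(examples: List[Tuple[int, bool]]) -> int:
    """Model: choose the threshold t (flag if value > t) with the fewest training errors."""
    values = sorted({value for value, _ in examples})
    # Include a candidate below the smallest value so "flag everything" is possible.
    candidates = [values[0] - 1] + values
    best_t, best_errors = candidates[0], len(examples) + 1
    for t in candidates:
        errors = sum((value > t) != label for value, label in examples)
        if errors < best_errors:
            best_t, best_errors = t, errors
    return best_t


# Hypothetical labeled data: (failed logins per minute, was it an attack?)
training_data = [(2, False), (5, False), (8, False), (12, False),
                 (30, True), (45, True), (60, True), (90, True)]
threshold = learn_threshold(training_data)
print(threshold)            # 12
print(rule_based_flag(45))  # False: the fixed rule misses this attack
print(45 > threshold)       # True: the learned threshold catches it
```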

An AI model typically relies on multiple algorithms working together throughout the training and inference phases of its lifecycle.

Training Phase

- Optimization algorithms, like gradient descent, adjust the model's parameters to minimize error and improve accuracy.

- Data preprocessing algorithms clean and prepare the data for training, handling tasks like normalization, feature extraction, and data augmentation.

- Model architecture algorithms define the structure of the AI model, such as the layers and connections in a neural network.
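A tiny logistic regression trained from scratch shows how these training-phase algorithms interact. The sketch below uses hypothetical toy data. Min-max normalization serves as the data preprocessing step. The model architecture is a single weighted sum passed through a sigmoid. Batch gradient descent is the optimization algorithm that adjusts the weights to reduce log-loss. Production systems use mature libraries and far larger models, so treat this only as an illustration.

```python
import math
from typing import List, Sequence, Tuple


def min_max_normalize(rows: List[List[float]]) -> List[List[float]]:
    """Preprocessing: rescale each feature column to the range [0, 1]."""
    columns = list(zip(*rows))
    lows = [min(c) for c in columns]
    highs = [max(c) for c in columns]
    return [
        [(v - lo) / (hi - lo) if hi > lo else 0.0 for v, lo, hi in zip(row, lows, highs)]
        for row in rows
    ]


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic_regression(
    xs: List[List[float]], ys: Sequence[int], lr: float = 0.5, epochs: int = 2000
) -> Tuple[List[float], float]:
    """Optimization: batch gradient descent on the mean log-loss."""
    n, d = len(xs), len(xs[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for x, y in zip(xs, ys):
            # Architecture: weighted sum of features passed through a sigmoid.
            error = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            for j in range(d):
                grad_w[j] += error * x[j]
            grad_b += error
        # Step against the averaged gradient to reduce the loss.
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


# Hypothetical toy data: [payload size in KB, requests per minute] -> 1 if malicious.
raw = [[1.0, 10.0], [2.0, 12.0], [1.5, 8.0], [8.0, 90.0], [9.0, 110.0], [7.5, 95.0]]
labels = [0, 0, 0, 1, 1, 1]
features = min_max_normalize(raw)
weights, bias = train_logistic_regression(features, labels)
print(weights, bias)
```

In a real pipeline, the per-column minimums and maximums computed on the training data would be saved and reused to normalize inputs at inference time. Otherwise, the model sees differently scaled data than it was trained on.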

Inference Phase

- Prediction algorithms process new input data through the trained model to generate predictions or classifications.

- Post-processing algorithms refine the model's output, such as converting raw predictions into meaningful formats or applying thresholds for classification.
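The two inference-phase steps can be sketched in a few lines. The weights below are hypothetical values standing in for parameters produced during training. The prediction step turns an input into a probability. A post-processing step then converts that probability into a label using a decision threshold. The threshold of 0.5 is an assumption; security teams often tune it to trade false positives against missed detections.

```python
import math
from typing import Dict, Sequence

# Hypothetical parameters, standing in for the output of a training run.
WEIGHTS = [4.0, 6.0]
BIAS = -5.0


def predict_proba(features: Sequence[float]) -> float:
    """Prediction: pass the input through the trained model to get a probability."""
    z = sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def postprocess(probability: float, threshold: float = 0.5) -> Dict[str, object]:
    """Post-processing: apply a decision threshold and format the result."""
    return {
        "label": "malicious" if probability >= threshold else "benign",
        "confidence": round(probability, 3),
    }


print(postprocess(predict_proba([0.9, 0.8])))
# {'label': 'malicious', 'confidence': 0.968}
```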

Additional Algorithms

- Evaluation algorithms assess the model's performance using metrics like accuracy, precision, recall, and F1 score.

- Deployment algorithms handle the integration of the AI model into real-world applications, ensuring efficient and scalable operation.
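The evaluation metrics named above all follow from counts of true and false positives and negatives. This is a minimal sketch with hypothetical labels. Libraries such as scikit-learn provide equivalent, more thoroughly tested functions.

```python
from typing import Dict, Sequence


def classification_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy, precision, recall, and F1 for binary labels (1 = positive class)."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("need two non-empty label sequences of equal length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0  # share of alerts that were real threats
    recall = tp / (tp + fn) if tp + fn else 0.0     # share of real threats that were caught
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / len(pairs), "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical detector output on ten samples (1 = malicious).
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
print(classification_metrics(y_true, y_pred))
# {'accuracy': 0.8, 'precision': 0.75, 'recall': 0.75, 'f1': 0.75}
```

Accuracy alone can mislead when threats are rare. A detector that never raises an alert scores high accuracy on mostly benign traffic but has zero recall, which is why precision, recall, and F1 are reported alongside it.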

Managing the AI supply chain is essential for ensuring the security and integrity of AI models and protecting sensitive data from exposure or misuse.
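One concrete supply chain control is to pin the cryptographic digest of each third-party model artifact and refuse to load any file that does not match. The sketch below assumes the expected SHA-256 digest was recorded when the artifact was first vetted. The file name and helper names are hypothetical. This check confirms only that the file has not changed since vetting; it cannot show that the vetted artifact was trustworthy in the first place.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large model weight files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: Path, expected_sha256: str) -> None:
    """Raise if the artifact differs from the digest pinned when it was vetted."""
    actual = sha256_of_file(path)
    if actual != expected_sha256.lower():
        raise RuntimeError(f"integrity check failed for {path.name}: got {actual}")


# Demo with a hypothetical artifact written to a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    model_path = Path(tmp) / "model.bin"  # hypothetical file name
    model_path.write_bytes(b"pretend these are model weights")
    pinned = sha256_of_file(model_path)  # recorded once, at vetting time
    verify_artifact(model_path, pinned)  # passes silently
    model_path.write_bytes(b"tampered weights")
    try:
        verify_artifact(model_path, pinned)
    except RuntimeError as err:
        print(err)  # the tampered file is rejected before it is loaded
```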

AI attack vectors are the various ways in which threat actors can exploit vulnerabilities in AI and ML systems to compromise their security or functionality. Some common AI attack vectors include:

- Data poisoning: Manipulating the training data to introduce biases or errors in the AI model, causing it to produce incorrect or malicious outputs.

- Model inversion: Using the AI model's output to infer sensitive information about the training data or reverse-engineer the model.

- Adversarial examples: Crafting input data that is subtly altered to cause the AI model to produce incorrect or harmful outputs, while appearing normal to human observers.

- Model theft: Stealing the AI model or its parameters to create a replica for unauthorized use or to identify potential vulnerabilities.

- Infrastructure attacks: Exploiting vulnerabilities in the cloud environments or data pipelines supporting AI systems to gain unauthorized access, disrupt operations, or exfiltrate data.
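Adversarial examples are easiest to see on a linear model. The sketch below applies the fast gradient sign method (FGSM), one well-known way to craft such inputs, to a hypothetical logistic-regression detector with hand-picked weights. It assumes the attacker knows those weights (white-box access). FGSM shifts every feature by the same small amount, epsilon, in whichever direction most increases the model's loss. Here, that shift flips the prediction even though no feature moves by more than epsilon.

```python
import math
from typing import List, Sequence

# Hypothetical detector: returns the probability that an input is malicious.
WEIGHTS = [1.5, -2.0, 3.0]
BIAS = -0.5


def predict_proba(x: Sequence[float]) -> float:
    z = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def fgsm(x: Sequence[float], true_label: int, epsilon: float) -> List[float]:
    """Fast gradient sign method for logistic regression with log-loss."""
    # For log-loss, d(loss)/dx_i = (p - y) * w_i; FGSM only needs its sign.
    p = predict_proba(x)
    grad = [(p - true_label) * w for w in WEIGHTS]
    return [xi + epsilon * math.copysign(1.0, g) if g != 0 else xi
            for xi, g in zip(x, grad)]


x = [0.6, 0.4, 0.5]          # hypothetical malicious input
x_adv = fgsm(x, true_label=1, epsilon=0.2)
print(round(predict_proba(x), 2), round(predict_proba(x_adv), 2))
# roughly 0.75 before the perturbation and 0.45 after, so the label flips
```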

By monitoring AI models, data pipelines, and associated infrastructure, real-time detection systems can quickly surface abnormal activity, such as unauthorized access attempts or signs of external tampering. Rapid response capabilities, together with automated remediation measures and incident response plans, allow organizations to mitigate security risks and maintain the trustworthiness of their AI systems.
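As a minimal illustration of real-time detection, the sketch below uses a rolling z-score to flag any metric reading that deviates sharply from its recent history. The metric, window size, and threshold are all assumptions. The example metric is queries per minute against a model endpoint, where a sudden spike might indicate scraping or a model-extraction attempt. Production systems typically combine many signals and route alerts into the incident response process.

```python
import math
from collections import deque
from typing import Deque


class RollingZScoreDetector:
    """Flag values more than `threshold` standard deviations from a rolling mean."""

    def __init__(self, window: int = 30, threshold: float = 3.0, min_history: int = 10):
        self.history: Deque[float] = deque(maxlen=window)
        self.threshold = threshold
        self.min_history = min_history

    def observe(self, value: float) -> bool:
        """Return True if `value` is anomalous relative to the values seen before it."""
        anomalous = False
        if len(self.history) >= self.min_history:
            mean = sum(self.history) / len(self.history)
            std = math.sqrt(sum((v - mean) ** 2 for v in self.history) / len(self.history))
            if std > 0:
                anomalous = abs(value - mean) / std > self.threshold
            else:
                anomalous = value != mean  # any change from a perfectly flat baseline
        self.history.append(value)
        return anomalous


# Hypothetical queries per minute against a model endpoint, ending in a sudden spike.
queries_per_minute = [100, 104, 98, 101, 97, 103, 99, 102, 100, 96, 101, 99, 450]
detector = RollingZScoreDetector()
alerts = [detector.observe(q) for q in queries_per_minute]
print(alerts[-1])  # True: the spike is flagged
```

This sketch appends every reading to the history, including anomalous ones, so a sustained new level eventually becomes the baseline. Excluding flagged values would resist slow baseline poisoning, but it would also keep alerting after a legitimate change in traffic. Which trade-off fits depends on the deployment.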