As businesses adopt artificial intelligence (AI) and navigate the evolving landscape of Large Language Models (LLMs), they face the challenge of securing these powerful models against a growing range of threats. From prompt injection and data leakage to malicious URLs and data poisoning, the security risks surrounding AI deployments are diverse and complex. To realize the transformative potential of generative AI, organizations must ensure the safety and integrity of these systems.

Today, we are excited to showcase how Palo Alto Networks AI Runtime Security API Intercept leverages NVIDIA NeMo Guardrails to help security teams and AI application builders more easily deploy and configure security controls with broad, deep coverage across risk areas.

Securely Develop & Deploy AI Applications

Using AI Runtime Security API Intercept with NVIDIA NeMo Guardrails addresses many LLM security challenges, enabling organizations to deploy and operate AI workloads with greater confidence.

NeMo Guardrails from NVIDIA

NeMo Guardrails lets developers define, orchestrate, and enforce AI guardrails in LLM applications. Guardrails (or "rails" for short) are specific ways of controlling user interactions with, and the behavior of, LLM applications: for example, avoiding political topics, responding in a particular way to specific user requests, following a predefined dialog path, using a particular language style, or extracting structured data.

AI Runtime Security API Intercept from Palo Alto Networks

AI Runtime Security API Intercept is an adaptive, purpose-built solution that protects every component of the AI ecosystem, including applications, models, agents, and their data, from AI-specific threats. The service is a REST API for customers who want to secure their AI apps and agents by calling it inline from their application code.
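Because API Intercept is called inline from application code, each check amounts to one HTTP request. The sketch below only builds such a request with Python's standard library so its shape is easy to see; it does not send it. The endpoint URL is a placeholder, and the `x-pan-token` header and the `tr_id`, `ai_profile`, and `contents` field names are assumptions for illustration, so verify them against Palo Alto Networks' API reference before relying on them.

```python
import json
import urllib.request
import uuid
from typing import Optional

# Hypothetical placeholders: confirm the real endpoint URL, auth header, and
# JSON schema in Palo Alto Networks' official API documentation.
SCAN_URL = "https://api.example.invalid/v1/scan/sync/request"
AUTH_HEADER = "x-pan-token"


def build_scan_request(api_key: str, profile_name: str, prompt: str,
                       response: Optional[str] = None) -> urllib.request.Request:
    """Build, without sending, a POST request asking the service to scan one exchange."""
    content = {"prompt": prompt}
    if response is not None:
        # Include the model output so it can be checked too (e.g. for data leakage).
        content["response"] = response
    body = {
        "tr_id": str(uuid.uuid4()),                    # assumed per-request transaction ID
        "ai_profile": {"profile_name": profile_name},  # assumed security-profile selector
        "contents": [content],
    }
    return urllib.request.Request(
        SCAN_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", AUTH_HEADER: api_key},
        method="POST",
    )
```

To actually send it, you would pass the request to `urllib.request.urlopen` and parse the JSON verdict from the response body; a production client would also need timeouts, retries, and secure handling of the API key.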

Comprehensive LLM Protection

Together, these two solutions let AI application builders easily protect their LLMs against a comprehensive range of attacks and misuse.

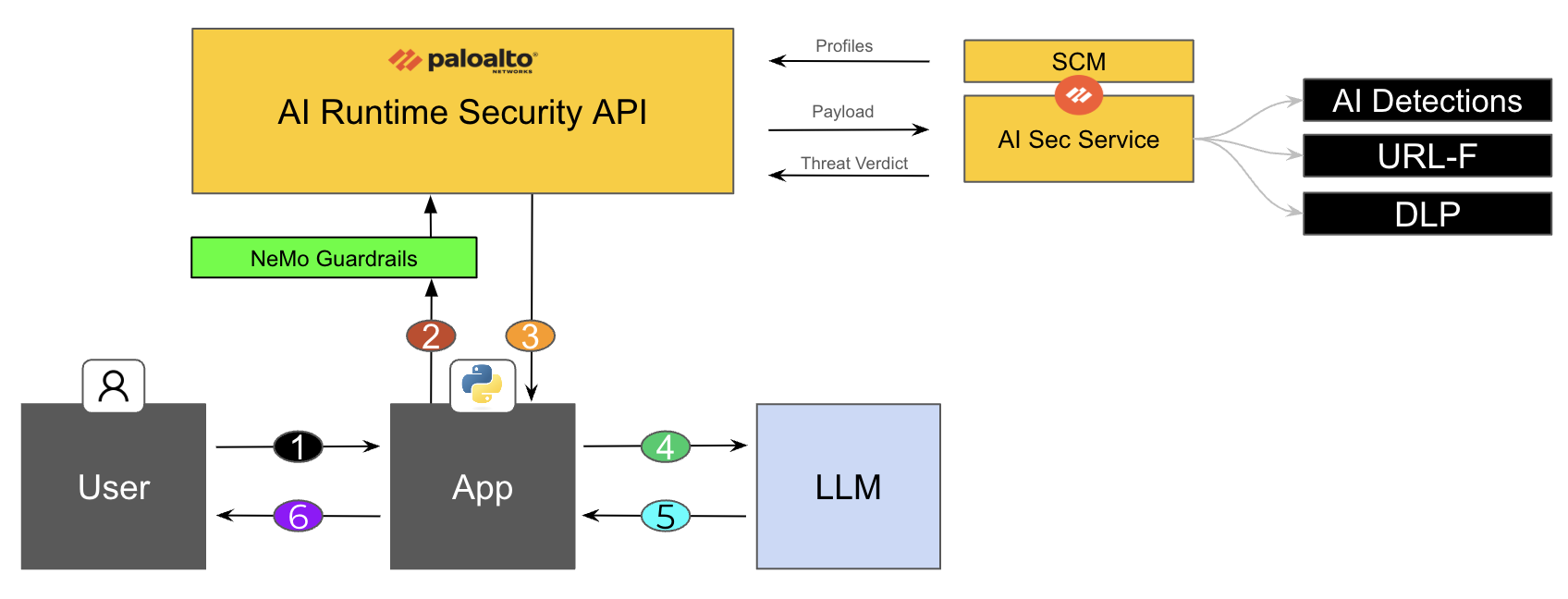

Figure 1: Architecture diagram showing order of operations with NeMo Guardrails and AI Runtime Security API Intercept.

Simple Path to Defense-in-Depth for LLMs

NeMo Guardrails includes ready-to-use guardrail configurations that can be customized and extended through a rich ecosystem of open community, third-party and NVIDIA rails and models, including NVIDIA NeMo Guardrails NIM microservices.

Implementing a custom action in NeMo Guardrails that calls the Palo Alto Networks AI Runtime Security API adds another layer of enterprise-grade protection and centralizes Layer 7 enforcement for AI applications. It also lets developers harness the breadth and depth of Palo Alto Networks' AI detection portfolio, powered by Cloud-Delivered Security Services (CDSS).
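In NeMo Guardrails, custom actions are Python functions that Colang flows can invoke; they are typically marked with the `@action` decorator from `nemoguardrails.actions` or registered through `LLMRails.register_action`. The sketch below leaves that wiring out so it runs without the library and shows only the decision logic such an action might contain. The `scan` callable is a hypothetical stand-in for the HTTP call to AI Runtime Security API Intercept, and reading `user_message` and `bot_message` from `context` assumes the action receives the conversation context the way NeMo Guardrails' built-in self-check actions do.

```python
import asyncio
from typing import Awaitable, Callable, Optional

# Hypothetical type: any async callable that scans a prompt (and optionally a
# response) and returns the service's verdict as a dict, e.g. {"action": "allow"}.
ScanFn = Callable[[str, Optional[str]], Awaitable[dict]]


async def runtime_security_check(context: dict, scan: ScanFn,
                                 fail_closed: bool = True) -> bool:
    """Return True if the exchange may proceed, False if it should be blocked."""
    prompt = context.get("user_message", "")
    response = context.get("bot_message")  # absent when checking user input
    try:
        verdict = await scan(prompt, response)
        # Treat anything other than an explicit "allow" as a block.
        return verdict.get("action") == "allow"
    except Exception:
        # Scanner unreachable or returned something unusable: block by default.
        return not fail_closed


if __name__ == "__main__":
    async def always_allow(prompt, response):
        return {"action": "allow"}

    print(asyncio.run(runtime_security_check({"user_message": "Hi"}, always_allow)))
```

Failing closed is a design choice in this sketch, not documented product behavior: if the scanning service cannot be reached, the exchange is blocked rather than silently passed through. Teams that prioritize availability can set `fail_closed=False`.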

At a high level, combining the proactive, pattern-based defenses of NeMo Guardrails with the dynamic, real-time analysis of Palo Alto Networks' API Intercept establishes a layered security strategy that not only blocks well-known attacks but also mitigates emerging threats targeting AI runtime behavior.

6 Ways AI Runtime Security API Intercept & NVIDIA NeMo Guardrails Help Secure AI Applications

Let’s delve into specific use cases where the power of the AI Runtime Security API Intercept and NVIDIA NeMo Guardrails integration stands out.

- Defense-in-depth: By combining semantic guardrails with an independent threat detection service, developers gain a critical second line of defense against prompt injection, data leakage, and malicious inputs.

- Ease of deployment: Inserting AI Runtime Security API Intercept as a custom action within NeMo Guardrails enables application and security teams to centralize enforcement of AI security policies without having to implement controls across all instances of application code.

- Preventing prompt injections: Bolster protection with Palo Alto Networks' industry-leading detection, which covers 24 types of prompt injection across 8 languages, in tandem with NeMo Guardrails' flexible 'Disallowed' list and Jailbreak Detection NIM microservice.

- Enforcing data privacy: Use Palo Alto Networks' API-based threat detection to block sensitive data leaks during AI interactions, and NeMo Guardrails to monitor and control data flowing to and from an LLM. This helps organizations comply with data protection and privacy regulations such as GDPR and CCPA by preventing inadvertent exposure of sensitive data.

- Malicious URL detection: Safeguard AI applications and agents from crawling malicious URLs and IP addresses, protecting the integrity of their workflows.

- Detecting data poisoning: Identify contaminated training data before fine-tuning to keep the model from learning rogue behavior.
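The data poisoning use case places the check earlier, in the data pipeline rather than at inference time. A minimal sketch, assuming each fine-tuning record is a dict with `prompt` and `completion` fields and that `is_flagged` is a hypothetical callable wrapping whatever scanner verdict you use, could quarantine suspicious records before training:

```python
from typing import Callable, Iterable, List, Tuple


def screen_training_data(
    records: Iterable[dict],
    is_flagged: Callable[[str], bool],
) -> Tuple[List[dict], List[dict]]:
    """Split fine-tuning records into (clean, quarantined) using a scanner verdict."""
    clean: List[dict] = []
    quarantined: List[dict] = []
    for record in records:
        # Scan prompt and completion together so a poisoned pair is judged as a unit.
        text = f"{record.get('prompt', '')}\n{record.get('completion', '')}"
        (quarantined if is_flagged(text) else clean).append(record)
    return clean, quarantined
```

Quarantining rather than silently dropping records keeps them available for human review. For a quick offline trial, `is_flagged` can be any function of a string, such as `lambda t: "ignore previous instructions" in t.lower()`, though a keyword check like that is only a toy stand-in for a real scanner.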

"Our collaboration with NVIDIA NeMo Guardrails bolsters enterprise-grade security that allows our customers to deploy AI applications confidently, knowing that their deployments are protected by best-in-class security measures."

- Rich Campagna, SVP, Product Management

The Power of AI Runtime Security API Intercept & NVIDIA NeMo Guardrails

Security teams and AI application builders that deploy AI Runtime Security API Intercept have access to powerful, customizable, and most importantly, unobtrusive controls. NeMo Guardrails and AI Runtime Security can be integrated into existing or new environments within minutes.

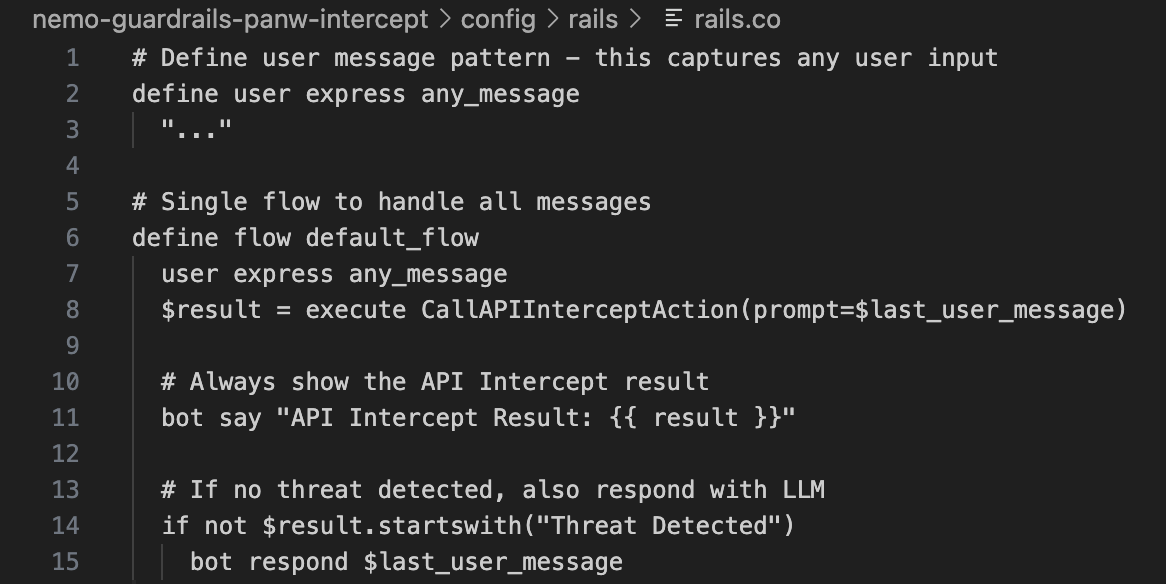

The following screenshot illustrates what the control flow works like during execution. Once a user enters a prompt, NeMo Guardrails will evaluate if the input matches any of its existing guardrails. If the input matches a guardrail definition, the prompt is blocked and NeMo Guardrails handles the response back to the user or application. If the input does not match a guardrail, the prompt is inspected by AI Runtime Security API Intercept to determine whether to block or allow the request.

Figure 2: Chatbot flow with NeMo Guardrails and AI Runtime Security API Intercept configured.
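The two-stage flow in Figure 2 can be modeled in plain Python. This is a simplified sketch, not the integration's actual code: real NeMo Guardrails rails are defined in configuration and can use semantic or model-based matching, whereas `disallowed_phrases` here is a plain substring list, and `api_intercept_verdict` is a hypothetical callable standing in for the API Intercept scan.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Optional


@dataclass
class Decision:
    allowed: bool
    blocked_by: Optional[str] = None  # "guardrails", "api_intercept", or None
    message: Optional[str] = None     # response returned to the user when blocked


def evaluate_prompt(
    prompt: str,
    disallowed_phrases: Iterable[str],
    api_intercept_verdict: Callable[[str], str],
) -> Decision:
    """Run local rails first; only prompts that pass them go to the remote scanner."""
    lowered = prompt.lower()
    # Stage 1: stand-in for NeMo Guardrails input rails (simple substring match).
    for phrase in disallowed_phrases:
        if phrase.lower() in lowered:
            return Decision(False, "guardrails", "Sorry, I can't help with that topic.")
    # Stage 2: stand-in for the AI Runtime Security API Intercept scan.
    if api_intercept_verdict(prompt) != "allow":
        return Decision(False, "api_intercept", "This request was blocked by security policy.")
    return Decision(True)
```

One consequence of this ordering is that a prompt already rejected by a guardrail never triggers a network round trip to the scanning service; only prompts that pass the local rails are sent for inspection.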

NeMo Guardrails and AI Runtime Security API Intercept provide developers and security teams with layered defense mechanisms so they can more confidently deploy LLMs in production.

Palo Alto Networks & NVIDIA Help Make AI Security Accessible

NVIDIA and Palo Alto Networks are focused on making AI security accessible, flexible, and easy for developers to consume regardless of how they are building applications. NeMo Guardrails and Palo Alto Networks AI Runtime Security API Intercept are highly customizable and robust security solutions for securing LLMs, agentic workflows, and a variety of other use cases.

We're excited to contribute to the NeMo Guardrails project and look forward to continued collaboration with NVIDIA to solve the AI security challenges organizations face as they find new and innovative ways to harness LLMs, AI applications, and agentic AI to increase efficiency.

For directions on getting started with AI Runtime Security and NeMo Guardrails in your own environment, see the GitHub repository for source code and setup instructions.